Parts Identification from Photos

Agentic automation that auto-identifies at least 80% of photographed parts with at least 90% accuracy on SKU matching, processes each image in under 30 seconds across at least 50 concurrent jobs, and enables visual and keyword search across all processed photos with full audit updates to ERP, FSM, and inventory systems.

Challenge

Field technicians and warehouse staff photograph parts during service calls, inspections, and inventory audits but must manually cross-reference catalogs, manuals, and systems to identify SKUs, specifications, and compatibility. Each identification takes 10–20 minutes and invites errors. Photos sit across mobile devices, shared drives, ticketing systems, and email with no unified index or semantic lookup. Manual methods miss worn labels, damaged parts, and subtle visual differences, leading to wrong parts orders and repeat visits.

The objective: auto-identify ≥80% of photographed parts with ≥90% accuracy on SKU matching and ≥85% accuracy on specifications; achieve reliable feature detection in ≥70% of cases involving worn, damaged, or partially visible parts; process images of up to 10 MB in under 30 seconds each with ≥50 concurrent jobs; and enable visual and keyword search across 100% of processed photos with full audit updates to ERP, FSM, and inventory systems.
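As a rough capacity check on these targets, the sketch below computes the minimum sustained throughput they imply. It assumes every job slot stays busy and every image takes the full 30 seconds, which is the slowest case the objective allows; the function name is illustrative and not part of the platform.

```python
def images_per_hour(concurrent_jobs: int, seconds_per_image: float) -> float:
    """Sustained throughput when every job slot is always busy."""
    return concurrent_jobs * 3600 / seconds_per_image

# Targets from the objective: 50 concurrent jobs, 30 seconds per image.
print(images_per_hour(50, 30))  # 6000.0
```

Under those assumptions the targets work out to at least 6,000 images per hour, compared with the 10–20 minutes a single manual identification takes.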

Solution: How AIP changed the operating model

Learning and setup

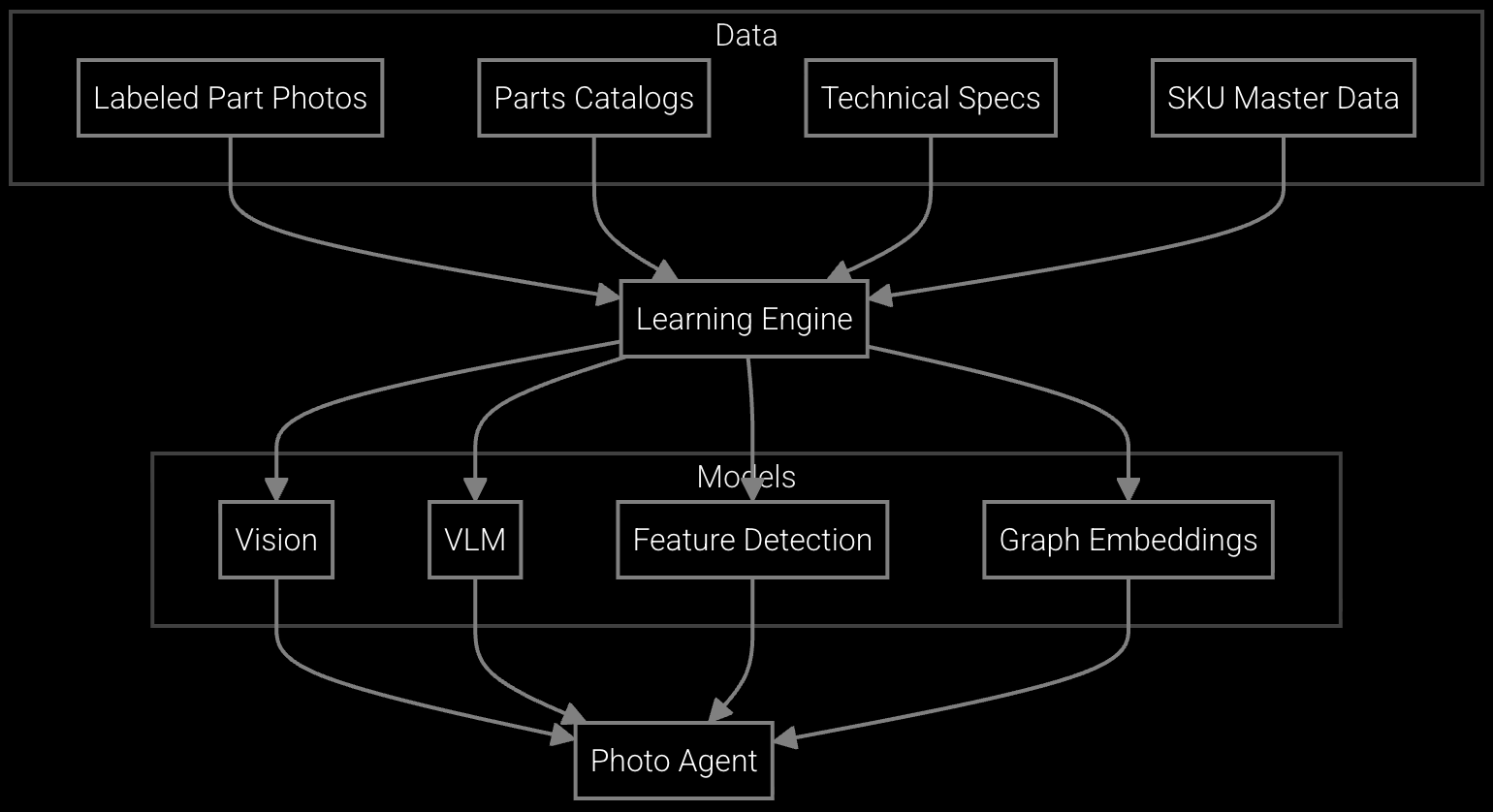

Powered by the Aftermarket Intelligence Platform (AIP), the agentic solution applied computer vision, vision-language models, feature detection, and graph embeddings. Training data came from labeled part photos, parts catalogs with images, technical specifications, installation manuals, SKU master data, supersession mappings, field photos with technician annotations, error cases, and user corrections. This enabled the AI agent to recognize part shapes and profiles, manufacturer logos and markings, model numbers and serial codes, material types and finishes, connectors and mounting points, wear patterns and damage indicators, dimensional features, and compatibility attributes.

Workflow orchestration

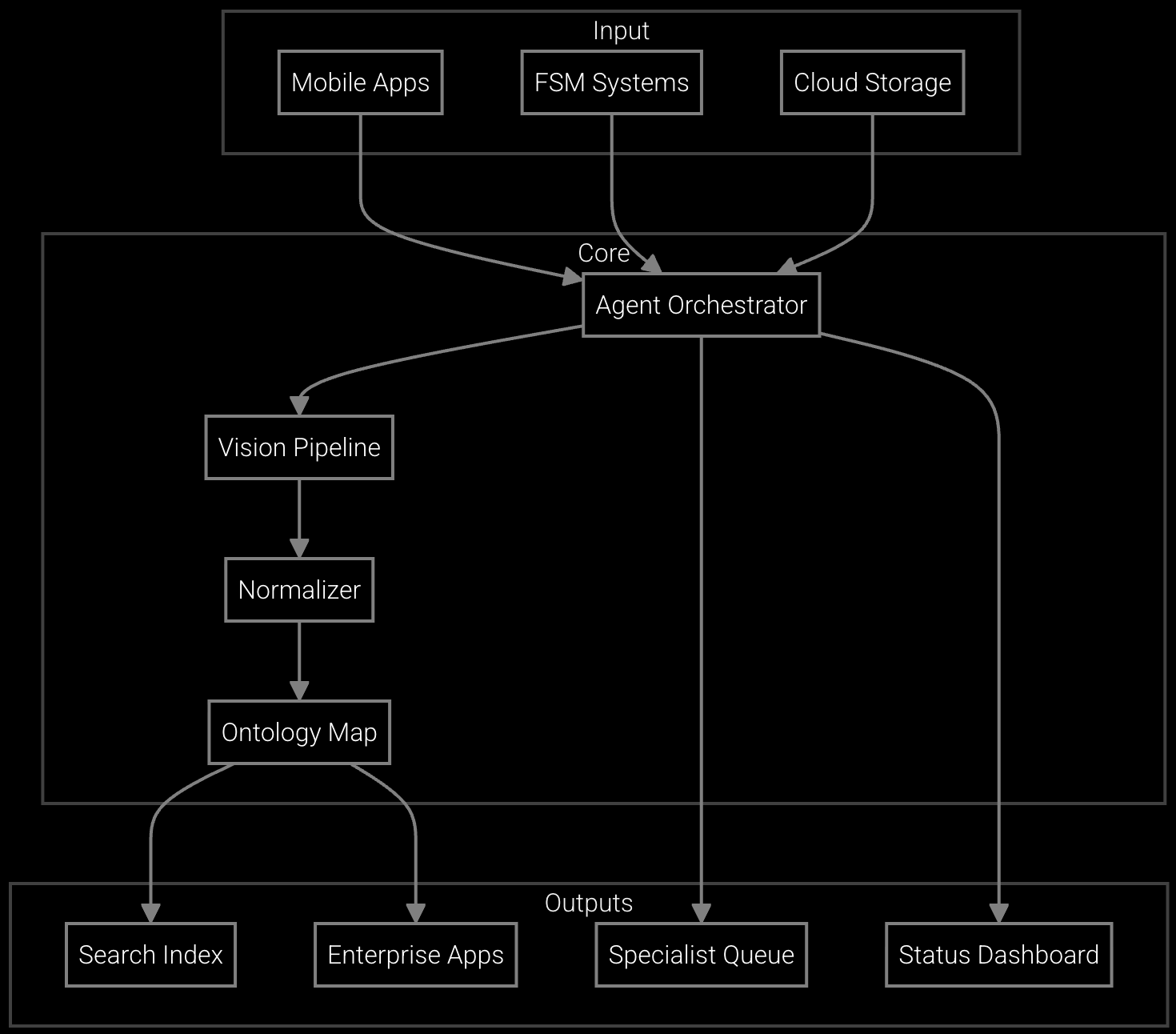

The AI agent ingests photos from mobile apps, FSM systems such as ServiceNow or Salesforce Field Service, email attachments, or cloud storage; routes images to vision pipelines; normalizes outputs; maps entities to the ontology; writes structured metadata back to ERP, FSM, and inventory systems; indexes results for visual and keyword search; escalates low-confidence matches to specialists; and posts status to dashboards.
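The orchestration described above can be sketched as a bounded-concurrency batch runner. This is a minimal illustration, not the platform's implementation: `identify` is a hypothetical stand-in for the vision pipeline, the 0.90 review threshold is an assumed value, and only the 50-job and 30-second limits come from the stated objective.

```python
import asyncio

MAX_CONCURRENT = 50      # concurrency target from the objective
TIMEOUT_SECONDS = 30     # per-image time limit from the objective
REVIEW_THRESHOLD = 0.90  # assumed cut-off for specialist review (hypothetical)

async def identify(image_id: int) -> dict:
    """Hypothetical stand-in for the vision pipeline; returns a fake match."""
    await asyncio.sleep(0)  # placeholder for real model inference
    confidence = 0.95 if image_id % 2 == 0 else 0.60
    return {"image_id": image_id, "sku": f"SKU-{image_id}", "confidence": confidence}

async def process(image_id: int, limiter: asyncio.Semaphore) -> dict:
    """Run one image through the pipeline and decide where the result goes."""
    async with limiter:  # at most MAX_CONCURRENT images in flight
        try:
            result = await asyncio.wait_for(identify(image_id), timeout=TIMEOUT_SECONDS)
        except asyncio.TimeoutError:
            return {"image_id": image_id, "route": "specialist", "reason": "timeout"}
    # Confident matches are written back; everything else goes to a person.
    route = "writeback" if result["confidence"] >= REVIEW_THRESHOLD else "specialist"
    return {**result, "route": route}

async def run_batch(image_ids) -> list:
    """Process a batch of images concurrently, preserving input order."""
    limiter = asyncio.Semaphore(MAX_CONCURRENT)
    return await asyncio.gather(*(process(i, limiter) for i in image_ids))

if __name__ == "__main__":
    for row in asyncio.run(run_batch(range(4))):
        print(row["image_id"], row["route"])
```

Keeping the routing decision in one place means a timeout and a low-confidence match take the same escalation path to a specialist.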

Execution and resolution

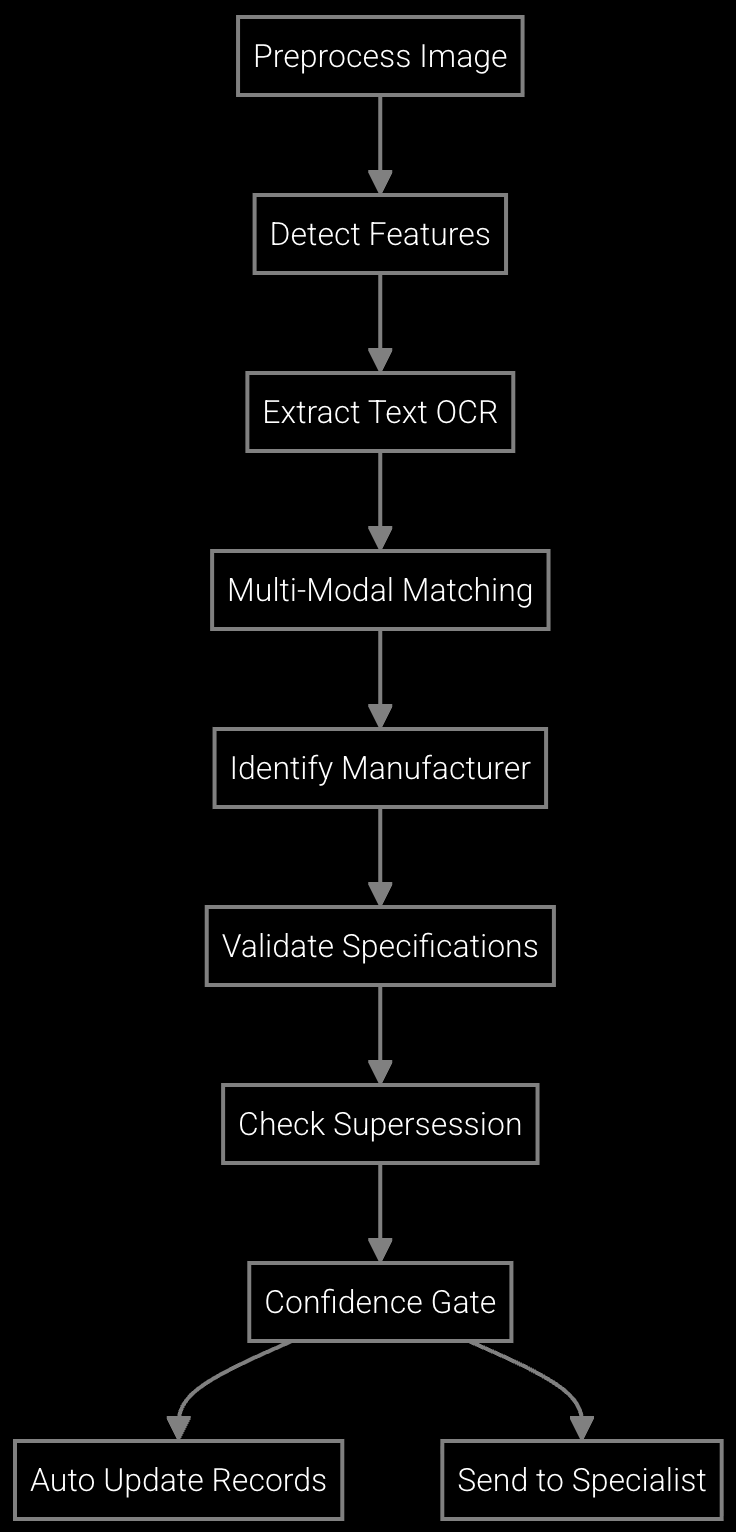

The AI agent preprocesses images (rotation correction, lighting normalization, background removal), detects key features and landmarks, extracts visible text and markings via OCR, matches them against the parts catalog using multi-modal embeddings, identifies manufacturer and model from logos, validates specifications and dimensions, checks supersession chains, assigns confidence scores, and exports structured records with matched SKUs. Responses complete in under 30 seconds per image with parallel batch processing, and updates post to connected systems. Exceptions such as heavily worn parts, obscured markings, poor lighting conditions, or ambiguous matches route to specialists with full context and similar candidates.
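The matching, supersession, and confidence steps above can be illustrated with a small sketch. It assumes cosine similarity over precomputed embedding vectors, a simple old-SKU-to-new-SKU supersession map, and a 0.90 confidence cut-off; the source does not specify the similarity measure or threshold, and all names and data here are hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def resolve_supersession(sku, supersedes):
    """Follow the old -> new SKU chain to its end, guarding against cycles."""
    seen = set()
    while sku in supersedes and sku not in seen:
        seen.add(sku)
        sku = supersedes[sku]
    return sku

def match_part(query, catalog, supersedes, threshold=0.90, top_k=3):
    """Rank catalog SKUs by similarity and flag weak matches for review."""
    if not catalog:
        raise ValueError("catalog is empty")
    ranked = sorted(((cosine(query, emb), sku) for sku, emb in catalog.items()),
                    reverse=True)
    candidates = [(resolve_supersession(sku, supersedes), round(score, 3))
                  for score, sku in ranked[:top_k]]
    best_sku, best_score = candidates[0]
    status = "matched" if best_score >= threshold else "needs_review"
    return {"sku": best_sku, "confidence": best_score,
            "status": status, "candidates": candidates}

if __name__ == "__main__":
    # Toy three-dimensional embeddings; real ones would come from a vision model.
    catalog = {"A-100": [1.0, 0.0, 0.0], "B-200": [0.0, 1.0, 0.0], "C-300": [0.6, 0.8, 0.0]}
    supersedes = {"A-100": "A-101"}  # A-100 has been replaced by A-101
    print(match_part([1.0, 0.0, 0.0], catalog, supersedes))
```

Returning the top candidates alongside the best match gives a specialist the similar parts to compare when a result is flagged `needs_review`.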