Legacy machinery spanning decades demands asset tracking that won't trap your team in vendor lock-in or force-fit generic solutions.

Industrial OEMs face a choice: build asset tracking systems in-house or adopt vendor platforms. The optimal approach combines API-first architecture with pre-built models, which allows customization without rebuilding foundational capabilities while maintaining data sovereignty and avoiding lock-in.

In-house development of asset tracking, configuration management, and predictive analytics requires years of ML engineering effort. Most industrial OEMs lack the specialized talent pool to build and maintain these systems.

Closed platforms trap proprietary equipment data in black-box systems. When vendor roadmaps diverge from your needs, migration becomes prohibitively expensive and data extraction nearly impossible.

Legacy equipment with decades-long lifecycles generates data from PLCs, SCADA systems, and IoT sensors in incompatible formats. Most vendor platforms expect modern, standardized telemetry streams that rarely exist in industrial environments.

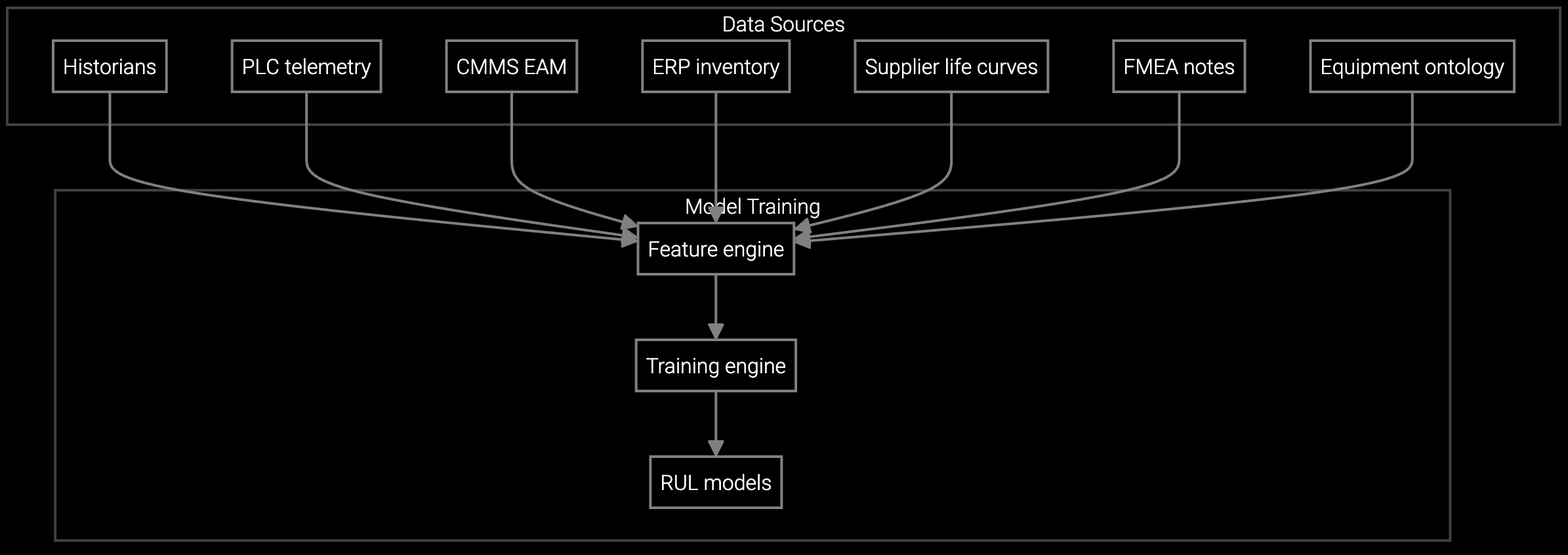

Bruviti's API-first platform resolves the build-versus-buy dilemma by separating data control from model training. Your team owns the asset registry, configuration data, and telemetry streams—stored in your infrastructure or cloud tenant. The platform provides pre-trained models for failure prediction, lifecycle analysis, and anomaly detection through RESTful APIs and Python SDKs.

This headless architecture means your developers integrate installed base intelligence into existing service applications without rearchitecting core systems. When requirements change, you extend the platform using standard languages (Python, TypeScript) rather than vendor-specific scripting. Data sovereignty remains absolute—every query, prediction, and insight flows through your controlled endpoints.

Analyzes IoT telemetry from pumps, compressors, and turbines to identify anomalies before they escalate into unplanned downtime.

Estimates component lifespan for CNC machines and automation systems based on run hours and operating conditions.

Schedules maintenance based on actual equipment condition rather than fixed intervals, reducing unnecessary interventions.
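The contrast between fixed-interval and condition-based scheduling can be sketched in a few lines of Python. This is an illustration only: the `health_score` field, the 0.6 threshold, the 180-day interval, and all function names are assumptions made for the example, not part of the platform's API.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Asset:
    asset_id: str
    last_service: date
    health_score: float  # hypothetical score: 0.0 (failed) to 1.0 (healthy)


def due_fixed_interval(asset: Asset, today: date, interval_days: int = 180) -> bool:
    """Fixed-interval rule: service once the calendar interval has elapsed."""
    return today - asset.last_service >= timedelta(days=interval_days)


def due_condition_based(asset: Asset, threshold: float = 0.6) -> bool:
    """Condition-based rule: service only when measured health drops below a threshold."""
    return asset.health_score < threshold


today = date(2025, 1, 1)
fleet = [
    Asset("pump-01", date(2024, 5, 1), health_score=0.92),    # serviced long ago, still healthy
    Asset("comp-07", date(2024, 11, 15), health_score=0.41),  # serviced recently, degrading
]

fixed = [a.asset_id for a in fleet if due_fixed_interval(a, today)]
condition = [a.asset_id for a in fleet if due_condition_based(a)]
print("fixed-interval:", fixed)
print("condition-based:", condition)
```

In this made-up fleet the calendar rule services the healthy pump and misses the degrading compressor, while the condition rule does the opposite.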

Industrial equipment lifecycles extend 10-30 years, far beyond typical software refresh cycles. A CNC machine installed in 2005 may still generate revenue in 2035, but its configuration data, parts history, and maintenance records often live in fragmented systems—or worse, in spreadsheets maintained by retiring engineers.

The platform ingests this heterogeneous data through connectors for SAP, Oracle, custom data lakes, and even CSV exports from legacy systems. Once unified, the asset registry becomes the foundation for predictive analytics, EOL planning, and contract renewal optimization—without forcing migration to a monolithic vendor ecosystem.
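To make the unification step concrete, here is a minimal sketch of mapping two differently shaped CSV exports onto one record schema using only the Python standard library. The column aliases, date formats, and field names (`serial_number`, `model`, `installed_on`) are assumptions for illustration and do not describe how the platform's connectors actually work.

```python
import csv
import io
from datetime import datetime

# Hypothetical header aliases seen across legacy exports.
COLUMN_ALIASES = {
    "serial": "serial_number",
    "serial no": "serial_number",
    "model": "model",
    "machine type": "model",
    "install date": "installed_on",
    "installed": "installed_on",
}

# Assumed date formats; ambiguous day/month orderings would need per-source rules.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d.%m.%Y")


def parse_date(raw: str) -> str:
    """Try each known format and return an ISO 8601 date string."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {raw!r}")


def normalize_csv(text: str) -> list[dict]:
    """Map one legacy CSV export onto the unified record schema."""
    records = []
    for row in csv.DictReader(io.StringIO(text)):
        record = {}
        for header, value in row.items():
            if header is None or value is None:
                continue  # skip malformed rows with extra or missing cells
            field = COLUMN_ALIASES.get(header.strip().lower())
            if field is not None:
                record[field] = value.strip()
        record["installed_on"] = parse_date(record["installed_on"])
        records.append(record)
    return records


erp_export = "Serial No,Machine Type,Install Date\nCNC-0042,VMC-850,03/14/2005\n"
sheet_export = "serial,model,installed\nCMP-0007,RC-200,2011-09-01\n"

registry = normalize_csv(erp_export) + normalize_csv(sheet_export)
for record in registry:
    print(record)
```

Keeping the alias table and date formats as plain data means a new legacy source can usually be added without touching the parsing logic.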

The platform operates in headless mode—all asset data, configuration records, and sensor telemetry remain in your infrastructure or designated cloud tenant. API calls pass data for inference but do not persist it in vendor-controlled storage. You retain full export capabilities and can terminate services without data migration barriers.

Monolithic platforms require proprietary data models and force-fit workflows. API-first design means your service applications call prediction endpoints using standard REST calls or the Python SDK. If you later build in-house models or switch vendors, you replace the endpoint, not the entire application stack.
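One way to keep the endpoint replaceable is to have application code depend on a small interface rather than on any specific client. The sketch below uses `typing.Protocol` for this. Every name in it (`FailurePredictor`, `HostedPredictor`, `InHousePredictor`, the `vibration_mm_s` field, the example URL) is hypothetical, and both predictors are stubs that return made-up scores without any network call.

```python
from typing import Protocol


class FailurePredictor(Protocol):
    """The only contract the application relies on."""

    def failure_probability(self, telemetry: dict[str, float]) -> float: ...


class HostedPredictor:
    """Stand-in for a client that would call a hosted prediction endpoint."""

    def __init__(self, base_url: str) -> None:
        self.base_url = base_url  # hypothetical URL; no request is sent in this sketch

    def failure_probability(self, telemetry: dict[str, float]) -> float:
        # A real client would send telemetry to self.base_url and parse the response.
        return 0.8 if telemetry.get("vibration_mm_s", 0.0) > 7.0 else 0.1


class InHousePredictor:
    """Stand-in for a model built and hosted by your own team."""

    def failure_probability(self, telemetry: dict[str, float]) -> float:
        score = telemetry.get("vibration_mm_s", 0.0) / 10.0
        return max(0.0, min(1.0, score))


def needs_inspection(predictor: FailurePredictor, telemetry: dict[str, float]) -> bool:
    """Application logic sees only the FailurePredictor interface."""
    return predictor.failure_probability(telemetry) >= 0.5


reading = {"vibration_mm_s": 8.2}
print(needs_inspection(HostedPredictor("https://predict.example.com"), reading))
print(needs_inspection(InHousePredictor(), reading))
```

Swapping providers then means constructing a different predictor object; `needs_inspection` and everything built on top of it stay unchanged.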

Legacy equipment is supported. The platform includes connectors for Modbus, OPC-UA, and the CSV export workflows common in industrial environments. For equipment predating digital integration, the system accepts manual uploads or batch imports, then normalizes the data for predictive modeling.

The Python SDK exposes model endpoints, asset registry queries, and lifecycle event triggers as standard libraries. Your developers can build custom dashboards, automate configuration audits, or train domain-specific models using familiar tooling—no proprietary scripting required.

Most industrial OEMs run 90-day pilots targeting a single product line (e.g., compressors or CNC machines). Key metrics include contract attachment rate improvement, configuration drift reduction, and MTBF increase. Pilots typically require 6-8 weeks for integration and 4-6 weeks of data collection before demonstrating measurable impact.
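Of the pilot metrics above, MTBF is the simplest to compute: total operating hours divided by the number of failures in the same period. A minimal sketch follows; the hours and failure counts are invented purely to show the arithmetic and are not pilot results.

```python
def mtbf_hours(operating_hours: float, failures: int) -> float:
    """Mean time between failures: operating time divided by failure count."""
    if failures <= 0:
        raise ValueError("MTBF is undefined without at least one failure")
    return operating_hours / failures


# Illustrative numbers only, not measured data.
baseline = mtbf_hours(operating_hours=12_000, failures=8)
pilot = mtbf_hours(operating_hours=12_000, failures=6)
change_pct = (pilot - baseline) / baseline * 100

print(f"baseline MTBF: {baseline:.0f} h")
print(f"pilot MTBF:    {pilot:.0f} h")
print(f"change:        {change_pct:+.1f}%")
```

Comparing the same product line over equal operating hours before and during the pilot keeps the two figures comparable.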

