Hyperscale customers demand 99.99% uptime SLAs. Your contact center strategy determines whether agents resolve issues or escalate them.

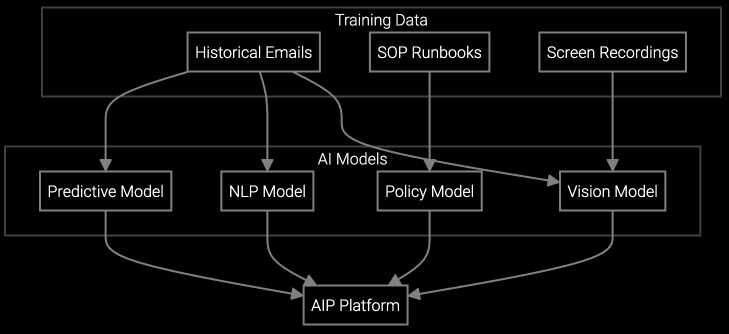

Data center OEMs should adopt a hybrid approach: buy pre-trained models for common agent workflows (case routing, triage, knowledge retrieval) and build custom integrations for telemetry analysis and equipment-specific diagnostics on API-first platforms that limit vendor lock-in.

Hyperscale deployments generate more support tickets with every node added to the fleet. Agents can't keep up with manual case classification and routing across thousands of server nodes, storage arrays, and cooling systems.

Agents switching between BMC interfaces, IPMI logs, RAID documentation, and vendor knowledge bases waste critical seconds on every call. Data center customers expect instant resolution for thermal alerts, drive failures, and power events.

Different agents give different answers for identical power supply failures or thermal anomalies. Without AI-guided resolution paths, newer agents miss critical diagnostic steps, resulting in repeat contacts and eroded customer trust.

Pure build-from-scratch approaches delay time to value by 18-24 months while your contact center drowns in tickets. Pure buy strategies lock you into rigid workflows that can't parse BMC telemetry or IPMI event codes specific to your server models.

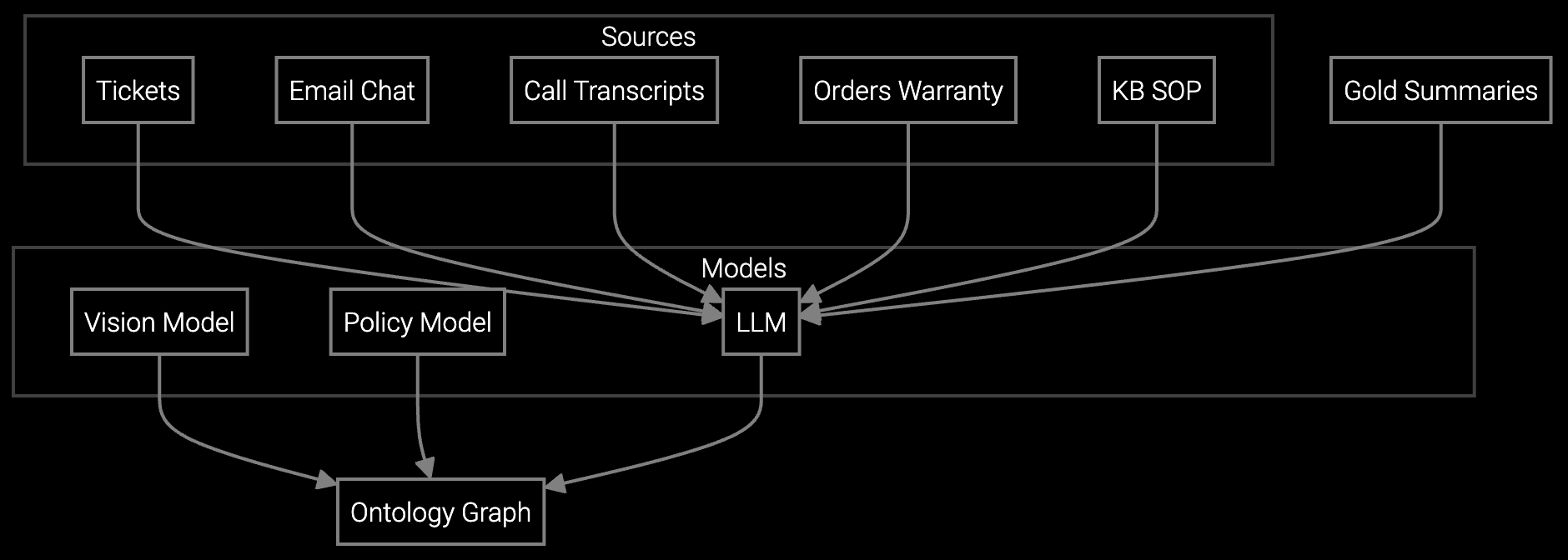

The optimal path combines pre-trained AI for universal agent tasks with custom models for equipment-specific diagnostics. Bruviti's platform delivers instant case routing and knowledge retrieval out-of-the-box while exposing APIs to train custom models on your historical RAID failure patterns, thermal alerts, and power distribution events. Agents get faster answers today while you retain full control over proprietary diagnostic logic tomorrow.

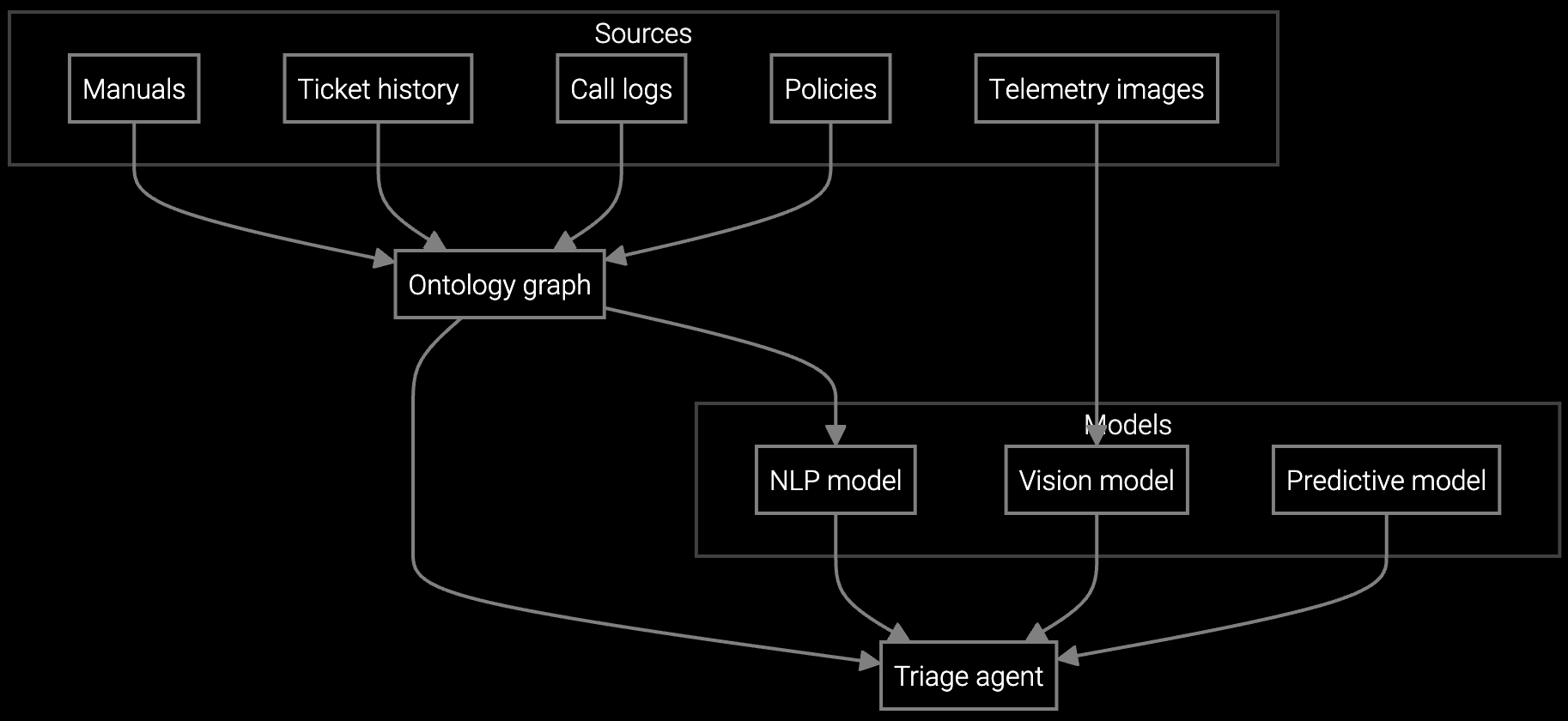

Autonomous case classification for data center equipment correlates BMC alerts, IPMI events, and thermal sensor data to route power, cooling, and compute issues to specialized teams with diagnostic context.
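To make the correlation idea concrete, here is a minimal sketch of rule-based routing, assuming each alert arrives as a record with a source, a sensor type, and a node ID. The `Signal` record, the `TEAM_BY_SENSOR` mapping, and `route_case` are hypothetical illustrations, not Bruviti's API; a production classifier would learn these associations rather than hard-code them. The sketch routes a case to the team whose sensors fired most often and attaches the matching alerts as diagnostic context.

```python
from dataclasses import dataclass


@dataclass
class Signal:
    """One alert from a hypothetical telemetry feed (field names are illustrative)."""
    source: str       # e.g. "BMC", "IPMI", "thermal"
    sensor_type: str  # e.g. "Temperature", "Fan", "Power Supply"
    node: str         # node identifier, e.g. "node-07"


# Hypothetical mapping from sensor type to the owning team.
TEAM_BY_SENSOR = {
    "Power Supply": "power",
    "Voltage": "power",
    "Temperature": "cooling",
    "Fan": "cooling",
    "Processor": "compute",
    "Memory": "compute",
}


def route_case(signals: list) -> dict:
    """Route to the team whose sensors fired most often and attach the evidence."""
    votes = {}
    for s in signals:
        team = TEAM_BY_SENSOR.get(s.sensor_type)
        if team is not None:
            votes[team] = votes.get(team, 0) + 1
    if not votes:
        # No recognized sensor types: fall back to a general queue.
        return {"team": "general", "nodes": [], "evidence": []}
    # Ties resolve to the team seen first, since dicts keep insertion order.
    team = max(votes, key=votes.get)
    evidence = [s for s in signals if TEAM_BY_SENSOR.get(s.sensor_type) == team]
    return {
        "team": team,
        "nodes": sorted({s.node for s in evidence}),
        "evidence": [f"{s.source}:{s.sensor_type}@{s.node}" for s in evidence],
    }


signals = [
    Signal("thermal", "Temperature", "node-07"),
    Signal("IPMI", "Fan", "node-07"),
    Signal("BMC", "Power Supply", "node-12"),
]
print(route_case(signals)["team"])  # cooling
```

Two cooling-related alerts on the same node outweigh one power alert, so the case lands with the cooling team along with both alerts as context.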

Case summarization instantly condenses hyperscale customer email threads, BMC log exports, and chat transcripts so agents understand multi-node failure patterns without reading 50+ messages.

AI reads data center customer emails reporting drive failures or thermal alerts, classifies them by urgency based on PUE impact, and drafts responses using historical resolution data and parts availability.
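Urgency by PUE impact can be illustrated with the standard definition of Power Usage Effectiveness: total facility power divided by IT equipment power. The sketch below assumes the IT load stays constant while a cooling fault raises facility overhead, and maps the PUE increase to a priority. The function names and the P1/P2 thresholds are hypothetical placeholders, not values from any product or standard.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power Usage Effectiveness: total facility power divided by IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment load must be positive")
    return total_facility_kw / it_equipment_kw


def urgency_from_pue(baseline_total_kw: float, current_total_kw: float,
                     it_equipment_kw: float,
                     p1_delta: float = 0.10, p2_delta: float = 0.03) -> tuple:
    """Map the rise in PUE since baseline to a priority label.

    The P1/P2 thresholds are hypothetical defaults, not industry values.
    """
    delta = pue(current_total_kw, it_equipment_kw) - pue(baseline_total_kw, it_equipment_kw)
    if delta >= p1_delta:
        priority = "P1"
    elif delta >= p2_delta:
        priority = "P2"
    else:
        priority = "P3"
    return priority, round(delta, 3)


# 1,000 kW IT load; cooling overhead pushes facility draw from 1,300 kW to 1,450 kW.
print(urgency_from_pue(1300, 1450, 1000))  # ('P1', 0.15)
```

Scoring the PUE delta rather than raw power draw keeps the ranking comparable across sites with different IT loads.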

Hyperscale customers operate at a scale where generic contact center AI fails. Your agents need instant access to BMC telemetry for specific server SKUs, RAID rebuild status for storage arrays, and real-time PUE impact calculations for cooling failures. Off-the-shelf platforms can't parse IPMI event codes or correlate thermal anomalies with hot aisle configurations.

A hybrid strategy lets you deploy proven agent workflows immediately while training custom models on your equipment's unique failure signatures. Start with AI-powered case routing and knowledge retrieval to cut handle time on common issues, then layer in custom telemetry analysis for complex multi-node failures that differentiate your support quality from competitors.

Pre-trained agent assist models deploy in 4-6 weeks for case routing and knowledge retrieval. Custom telemetry analysis models require 12-16 weeks to train on historical BMC data, IPMI logs, and thermal patterns. Most OEMs phase the rollout, starting with common server issues before tackling complex storage or cooling diagnostics.

API-first platforms ingest BMC telemetry streams and IPMI event logs to train custom models on your equipment's failure signatures. The platform learns your specific drive replacement thresholds, thermal alert patterns, and power distribution anomalies over 8-12 weeks of historical data analysis.
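Before any model can learn failure signatures, raw event logs have to become structured features. The sketch below parses System Event Log (SEL) lines in a simplified pipe-delimited layout, loosely modeled on the listings that tools such as `ipmitool sel list` print; exact columns vary by tool and firmware, so treat the layout as an assumption. `parse_sel_line` and `failure_signature` are hypothetical helpers, and the sample lines are invented for illustration.

```python
from collections import Counter
from typing import Optional


def parse_sel_line(line: str) -> Optional[dict]:
    """Parse one pipe-delimited SEL-style line; return None if it is malformed."""
    fields = [f.strip() for f in line.split("|")]
    if len(fields) < 6:
        return None
    record_id, date, time, sensor, event, direction = fields[:6]
    return {
        "id": record_id,
        "timestamp": f"{date} {time}",
        # "Temperature #0x30" -> "Temperature"
        "sensor_type": sensor.split(" #")[0],
        "event": event,
        "asserted": direction.lower() == "asserted",
    }


def failure_signature(lines) -> Counter:
    """Count asserted events per (sensor type, event text) as a simple feature vector."""
    counts = Counter()
    for line in lines:
        record = parse_sel_line(line)
        if record is not None and record["asserted"]:
            counts[(record["sensor_type"], record["event"])] += 1
    return counts


# Invented sample lines for illustration only.
sample = [
    "   1 | 04/15/2023 | 10:23:45 | Temperature #0x30 | Upper Critical going high | Asserted",
    "   2 | 04/15/2023 | 10:25:02 | Temperature #0x30 | Upper Critical going high | Deasserted",
    "   3 | 04/15/2023 | 11:02:10 | Power Supply #0x51 | Failure detected | Asserted",
    "   4 | 04/16/2023 | 09:14:33 | Temperature #0x30 | Upper Critical going high | Asserted",
    "not a SEL record",
]
print(failure_signature(sample).most_common(1))
# [(('Temperature', 'Upper Critical going high'), 2)]
```

Counting only asserted events avoids double-counting each alert when it later clears; per-node or per-SKU counts would be a natural next feature.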

Proprietary platforms trap you in multi-year contracts with limited export options for trained models. Open API architectures let you extract case routing logic, knowledge base embeddings, and diagnostic models if you ever switch platforms. Evaluate contract terms for model portability and data ownership before committing.

Deploy generic agent workflows first to reduce handle time on 60-70% of cases within weeks. Run custom model training in parallel on equipment-specific diagnostics. This phased approach delivers immediate ROI while building differentiated capabilities for complex thermal, power, and multi-node failure scenarios.

Frontline agents identify which workflow steps consume the most time and which knowledge gaps cause repeat contacts. Their input ensures the platform solves real problems like BMC log interpretation or parts availability lookups rather than automating tasks agents already handle efficiently.


See how Bruviti delivers pre-trained agent workflows with full API access for custom telemetry models.
