Purpose-built small models deliver the precision that 95% of general LLM-based enterprise projects couldn’t achieve.

The Enterprise AI Reality Check

MIT’s The GenAI Divide: State of AI in Business 2025 reports a stark outcome: 95% of enterprises saw zero return on $35–40B in AI investments, with only 5% of custom tools reaching production.1

As the report summarizes: “Most GenAI systems do not retain feedback, adapt to context, or improve over time.” Leaders consistently report tools that are fine for drafts but don’t carry forward the client and context-specific learning operations require.

The bottom line: general-purpose LLMs trained on public data are strong on breadth, but they consistently fall short when deep, specialized domain expertise is required.

Purpose-Built Small Models Are Built for Precision, Not Conversation

How They Outperform General LLMs

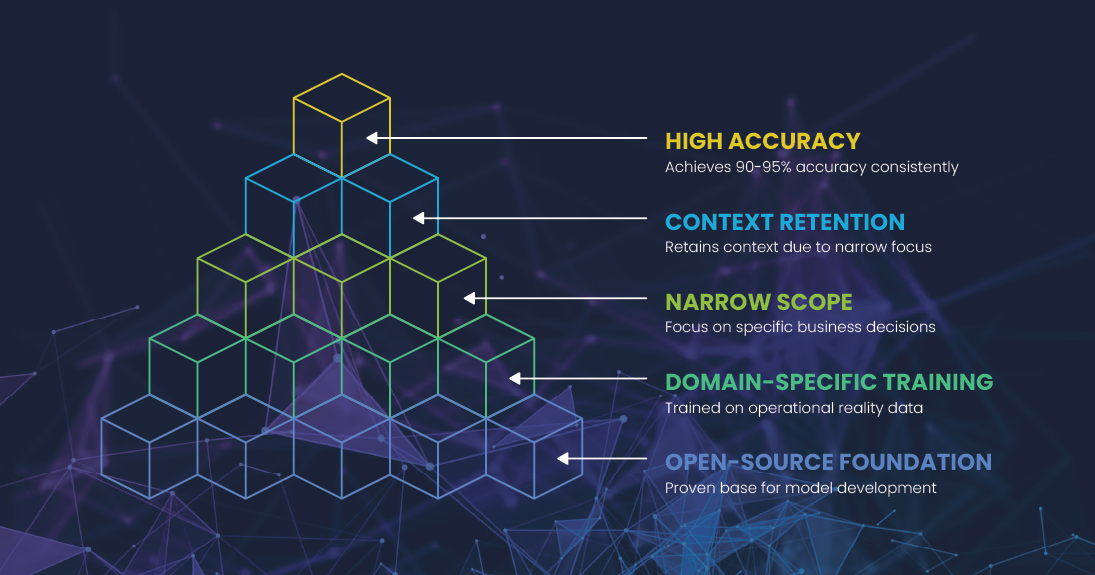

Purpose-built small models start with proven open-source foundations. They are then trained exclusively on your operational reality: parts catalogs, failure patterns, warranty matrices, and diagnostic histories. They’re not trying to chat about everything. They’re built to nail specific decisions your business depends on.

Context Is King

Small models retain context because their scope is deliberately narrow. They accumulate expertise in your domain rather than spreading knowledge thin across every possible topic. The result: accuracy on deep, complex product questions consistently reaches 90–95%, compared to about 60% from general LLMs. They also adapt three to ten times faster when rules change.

These models excel where complexity lives: equipment diagnostics, warranty determinations, and parts sourcing across thousands of SKUs. They understand that “Model X-470 bearing assembly, warranty clause 15.2b” means something specific, not just words to process.

Purpose-Built Models Require a Purpose-Built Platform

Developing purpose-built small models isn’t just about algorithms. It requires an environment where data, actions, and results can be continuously managed and improved in a closed loop. Because these models are narrow in focus, a platform where they can link to other models, small and large, is critical to applying them across broader workflows.

Key platform requirements include:

| Platform Capability | What It Provides | Why It’s Critical |

| Domain Knowledge Foundation | Pre-mapped relationships between assets, parts, failures, policies | Models start with expertise embedded, not learning basics from scratch |

| Reasoning Architecture | Decision frameworks tuned for operational precision | Enables consistent, explainable decisions under pressure |

| Secure Training Environment | In-house deployment where proprietary data stays proprietary | Models learn from your intelligence without exposing it externally |

These capabilities allow new models to be purpose-built rapidly. They use structured domain knowledge, proven reasoning patterns, and secure training in your own environment where your data lives.

The Aftermarket Intelligence Platform

At Bruviti, our Aftermarket Intelligence Platform transforms years of diagnostic decisions, parts compatibility discoveries, and warranty interpretations into agentic workflow automation. The platform provides governed domain knowledge graphs, reasoning frameworks tuned for operational precision, and secure in-environment training. This enables purpose-built models to be deployed in days, not months.

Proof Point: Complex Enterprise Service Operations

A global medical equipment manufacturer’s service teams relied on a knowledge agent powered by a general LLM for parts compatibility queries. Accuracy was frustratingly inconsistent, especially for complex rules and deep product knowledge.

The LLM was replaced with a purpose-built small model trained exclusively on their parts catalog, compatibility matrices, warranty terms, and ten years of service decisions.

The results were immediate. The same agent interface now delivered precise, instant answers: exact replacement parts across equipment generations, configurations, and compatible alternatives from preferred suppliers. Accuracy jumped to over 90%, and service teams trusted the results.

Three Principles to Carry Forward

- Do you control the data that makes models accurate?

Purpose-built small models achieve 90%+ accuracy because they’re trained on your specific operational data. That includes your parts matrices, diagnostic patterns, failure histories, and policy interpretations. This means your most valuable competitive intelligence must stay in your environment during training and operation. Data sovereignty isn’t just compliance, it’s competitive advantage. - Are you starting narrow enough to prove real value?

Choose one specific decision that costs you when it’s wrong, such as parts compatibility, warranty determination, or diagnostic triage. Deploy a specialized model for that single use case. Measure accuracy, speed, and error reduction against current processes. Only when that model consistently outperforms human decisions should you expand scope. Breadth follows depth, not the other way around. - Do you have a platform to deploy at scale?

The platform that enabled your first model should accelerate development of adjacent models. For example, moving from parts compatibility to warranty processing to diagnostic support. Without reusable foundations, each new model becomes a separate project. Ensure that your domain models are built and reside in a platform with the capabilities and domain knowledge to scale rapidly across operational domains.

FAQs

What is a small language model (SLM)?

A purpose-built model trained on curated domain data to solve specific operational challenges, rather than attempting broad general knowledge. It starts from a capable base model and gets specialized for tasks like parts compatibility or diagnostic triage.

How is a purpose-built model different from a fine-tuned LLM?

Fine-tuning adds domain knowledge to a general system. Purpose-built small models are designed for narrow scope from the start. They use constrained purpose, explicit decision rules, and task-specific evaluation. The result is higher precision and faster adaptation for operational decisions.

Do specialized models replace our existing AI tools?

Keep general AI for broad tasks and conversation. Route specific operational decisions to domain-specialized models for accuracy and consistency. Purpose-built model decisions can also be fed back into general conversational agents to deliver explanations and next-step guidance.

- MIT NANDA, The GenAI Divide: State of AI in Business 2025. Aug 2025 ↩︎