Incomplete asset data costs hyperscale operators millions in unplanned downtime—build tracking that scales.

Integrate asset tracking through REST APIs and Python SDKs. Connect BMC/IPMI telemetry streams to your asset registry, track configuration drift, and build custom lifecycle rules without vendor lock-in.

BMC telemetry, provisioning systems, and DCIM tools each track different asset attributes. Building a unified view requires parsing multiple vendor-specific formats and reconciling conflicts across systems.

Firmware versions, BIOS settings, and RAID configurations change without updating central records. Detecting drift requires continuous polling and comparison logic that's expensive to build from scratch.

Tracking millions of servers across distributed facilities demands real-time updates without overloading network or database infrastructure. Custom implementations often fail at hyperscale volumes.

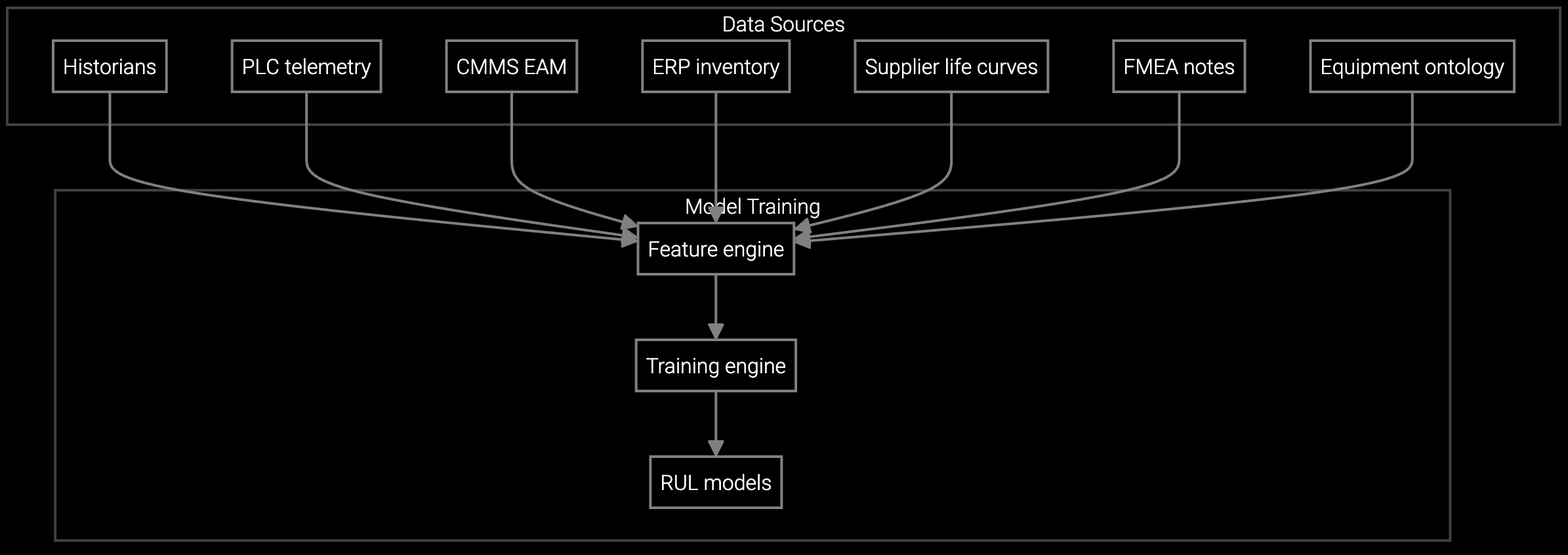

Bruviti's asset tracking APIs provide a headless integration layer between hardware telemetry sources and your existing asset registry. The Python SDK consumes BMC/IPMI streams, normalizes vendor-specific data formats, and exposes a unified schema for configuration state. You write custom lifecycle rules in Python—no proprietary scripting languages or vendor-specific plugins.

The platform handles the heavy lifting: telemetry ingestion at scale, conflict resolution when multiple sources report different states, and incremental updates to minimize network overhead. You control data ownership—export to your data lake, integrate with SAP or Oracle, or build custom dashboards without extracting data through vendor portals. Anti-lock-in by design.

Build virtual models of server fleets that mirror real-time BMC telemetry, enabling proactive maintenance before thermal or power anomalies cause outages.

Analyze IoT streams from PDUs, cooling systems, and compute nodes to identify correlated failures across racks and predict cascading issues.

Estimate when SSDs, DIMMs, and power supplies will fail based on usage patterns, enabling planned maintenance windows that avoid customer-facing downtime.

Data center OEMs manage asset complexity across compute (blade servers, rack servers), storage (SAN arrays, NAS appliances, HCI clusters), and infrastructure (UPS units, PDUs, cooling systems). Each product line generates telemetry through different protocols—BMC/IPMI for servers, SNMP for network-attached storage, proprietary APIs for cooling equipment.

The asset tracking API normalizes these streams into a unified configuration model. Firmware versions, BIOS settings, RAID states, and thermal profiles become queryable attributes regardless of underlying hardware vendor. Custom Python scripts trigger lifecycle actions—flag servers nearing EOL, identify upgrade candidates for new firmware, or surface configuration inconsistencies across redundant systems.

The platform natively ingests BMC/IPMI (Redfish and legacy IPMI), SNMP v2/v3 for network-attached devices, and REST APIs from common DCIM vendors like Schneider and Vertiv. Custom connectors for proprietary protocols can be built using the Python SDK with full access to raw telemetry streams.

The platform maintains a versioned configuration snapshot for each asset and compares incoming telemetry against the last known state. Only deltas are stored and transmitted, reducing database overhead by 80% compared to full-state snapshots. Drift alerts trigger when critical attributes—firmware version, BIOS settings, RAID config—deviate from policy.

Yes. The API supports bulk export to S3, Azure Blob Storage, or Google Cloud Storage in Parquet or JSON format. Real-time change streams can be pushed to Kafka or Kinesis for downstream analytics. All data remains under your control—no vendor portal required for access.

For products with standard BMC/IPMI telemetry, integration takes 1-2 weeks: API authentication, telemetry mapping, and basic lifecycle rules. Custom protocols or complex configuration schemas add 2-4 weeks for SDK development. Most OEMs pilot with one high-volume SKU before expanding.

You write integration logic in Python using standard libraries, not proprietary scripting languages. Data flows are controlled via API calls you own, not locked inside a vendor UI. Export formats are open (JSON, Parquet), and the headless architecture means switching analytics or visualization layers doesn't require re-ingesting data.

Software stocks lost nearly $1 trillion in value despite strong quarters. AI represents a paradigm shift, not an incremental software improvement.

Function-scoped AI improves local efficiency but workflow-native AI changes cost-to-serve. The P&L impact lives in the workflow itself.

Five key shifts from deploying nearly 100 enterprise AI workflow solutions and the GTM changes required to win in 2026.

Get API credentials and Python SDK documentation. Integration sandbox available immediately.

Access Developer Portal