Legacy routers reach EOL faster than procurement cycles, leaving your customers with unserviceable equipment.

Build a custom parts obsolescence tracking system on Bruviti's Python SDKs and REST APIs that integrates with your existing ERP and forecasts EOL parts demand without vendor lock-in.

Network equipment manufacturers discontinue router and switch models every 3-5 years, but your ERP doesn't flag which SKUs are approaching EOL until stockouts force emergency last-time buys.

Engineers maintain substitute parts lists in spreadsheets outside the ERP, creating version control nightmares when power supply or firmware changes break backward compatibility.

Your demand forecasting model assumes linear part consumption, but network equipment failures spike predictably based on firmware age and MTBF curves your data lake already contains.

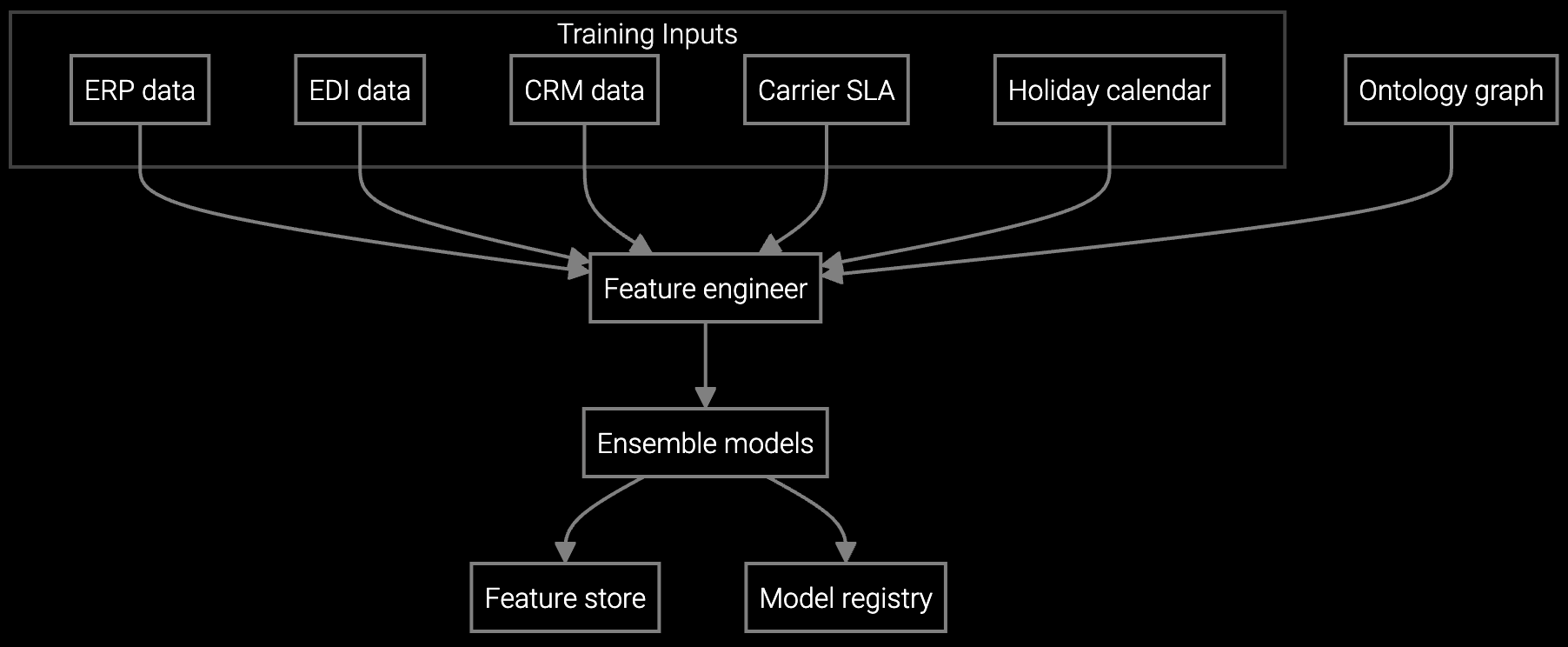

The platform provides Python SDKs and REST APIs that let you ingest product lifecycle data from supplier feeds, correlate it with your installed base telemetry, and generate EOL risk scores per SKU without replacing your ERP. You train models on your own historical RMA patterns and MTBF curves, then deploy them in your existing data pipeline using standard Docker containers.
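To make "EOL risk score per SKU" concrete, here is a minimal sketch that blends the time remaining until a supplier's EOL date with installed-base exposure per spare on hand. The `SkuLifecycle` schema, the 60/40 weighting, and the 24-month horizon are hypothetical choices for illustration. This is not the Bruviti SDK or its scoring model.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class SkuLifecycle:
    """One SKU after joining a supplier lifecycle row with installed-base and ERP data (hypothetical schema)."""
    sku: str
    eol_date: date          # supplier-announced end-of-life date
    installed_units: int    # units of this SKU in the field, from telemetry
    spares_on_hand: int     # current inventory, from the ERP


def months_until(eol: date, today: date) -> int:
    """Calendar months from today's month to the EOL month (negative once EOL has passed)."""
    return (eol.year - today.year) * 12 + (eol.month - today.month)


def eol_risk_score(item: SkuLifecycle, today: date, horizon_months: int = 24) -> float:
    """Risk in [0, 1]; higher means closer to EOL with more field units per spare.

    Assumed weighting: 60% time pressure, 40% coverage gap. Illustrative only.
    """
    remaining = months_until(item.eol_date, today)
    # 0 when EOL is at least `horizon_months` away, 1 when EOL is now or already past.
    time_pressure = min(1.0, max(0.0, 1 - remaining / horizon_months))
    # Field units per spare, normalized so 100 or more units per spare counts as a full gap.
    coverage_gap = min(1.0, item.installed_units / max(item.spares_on_hand, 1) / 100)
    return round(0.6 * time_pressure + 0.4 * coverage_gap, 3)


if __name__ == "__main__":
    today = date(2025, 1, 15)
    catalog = [
        SkuLifecycle("PSU-1100W", date(2025, 7, 1), installed_units=4000, spares_on_hand=20),
        SkuLifecycle("LC-48X10G", date(2028, 1, 1), installed_units=500, spares_on_hand=50),
    ]
    # Highest-risk SKUs first: a candidate queue for last-time-buy review.
    for item in sorted(catalog, key=lambda i: eol_risk_score(i, today), reverse=True):
        print(item.sku, eol_risk_score(item, today))
```

In practice the weights and normalization constant would be tuned against your own RMA history rather than fixed as above.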

The architecture is API-first and headless. Authentication uses OAuth2 tokens you control. Model inference runs on your infrastructure or ours. Data never leaves your VPC unless you explicitly configure cloud sync. You write the integration code in Python or TypeScript, version it in your repo, and deploy it alongside your existing microservices. No proprietary runtime, no vendor-specific DSL, no black box retraining cycles that lock you in.
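Because you control the OAuth2 credentials, token handling lives in your own code. Below is a minimal standard-library sketch of the OAuth2 client-credentials grant with token caching. `TOKEN_URL`, the one-hour fallback lifetime, and the 60-second refresh margin are placeholder assumptions, not documented Bruviti values; the HTTP call and the clock are injectable so the caching logic can be tested without a network.

```python
import base64
import json
import time
import urllib.parse
import urllib.request
from typing import Callable, Optional

# Placeholder: use the token endpoint issued by your identity provider.
TOKEN_URL = "https://auth.example.com/oauth2/token"


def fetch_token_http(client_id: str, client_secret: str) -> dict:
    """Request a token with the client_credentials grant (RFC 6749, section 4.4)."""
    # RFC 6749 section 2.3.1: form-encode the id and secret, then send them as HTTP Basic auth.
    creds = f"{urllib.parse.quote_plus(client_id)}:{urllib.parse.quote_plus(client_secret)}"
    req = urllib.request.Request(
        TOKEN_URL,
        data=urllib.parse.urlencode({"grant_type": "client_credentials"}).encode(),
        headers={
            "Authorization": "Basic " + base64.b64encode(creds.encode()).decode(),
            "Content-Type": "application/x-www-form-urlencoded",
        },
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)


class TokenCache:
    """Reuses a bearer token until it is within `margin` seconds of expiry."""

    def __init__(self, client_id: str, client_secret: str,
                 fetch: Callable[[str, str], dict] = fetch_token_http,
                 clock: Callable[[], float] = time.monotonic, margin: float = 60.0):
        self._client_id = client_id
        self._client_secret = client_secret
        self._fetch = fetch
        self._clock = clock
        self._margin = margin
        self._token: Optional[str] = None
        self._expires_at = 0.0

    def get(self) -> str:
        """Return the cached token, fetching a new one when missing or about to expire."""
        if self._token is None or self._clock() >= self._expires_at - self._margin:
            payload = self._fetch(self._client_id, self._client_secret)
            self._token = payload["access_token"]
            # expires_in is RECOMMENDED, not required, by RFC 6749 section 5.1; assume 1 hour if absent.
            self._expires_at = self._clock() + float(payload.get("expires_in", 3600))
        return self._token

    def auth_header(self) -> dict:
        """Header to merge into each outgoing API request."""
        return {"Authorization": f"Bearer {self.get()}"}
```

A service would call `auth_header()` before each API request; the cache refreshes the token only when it is close to expiring.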

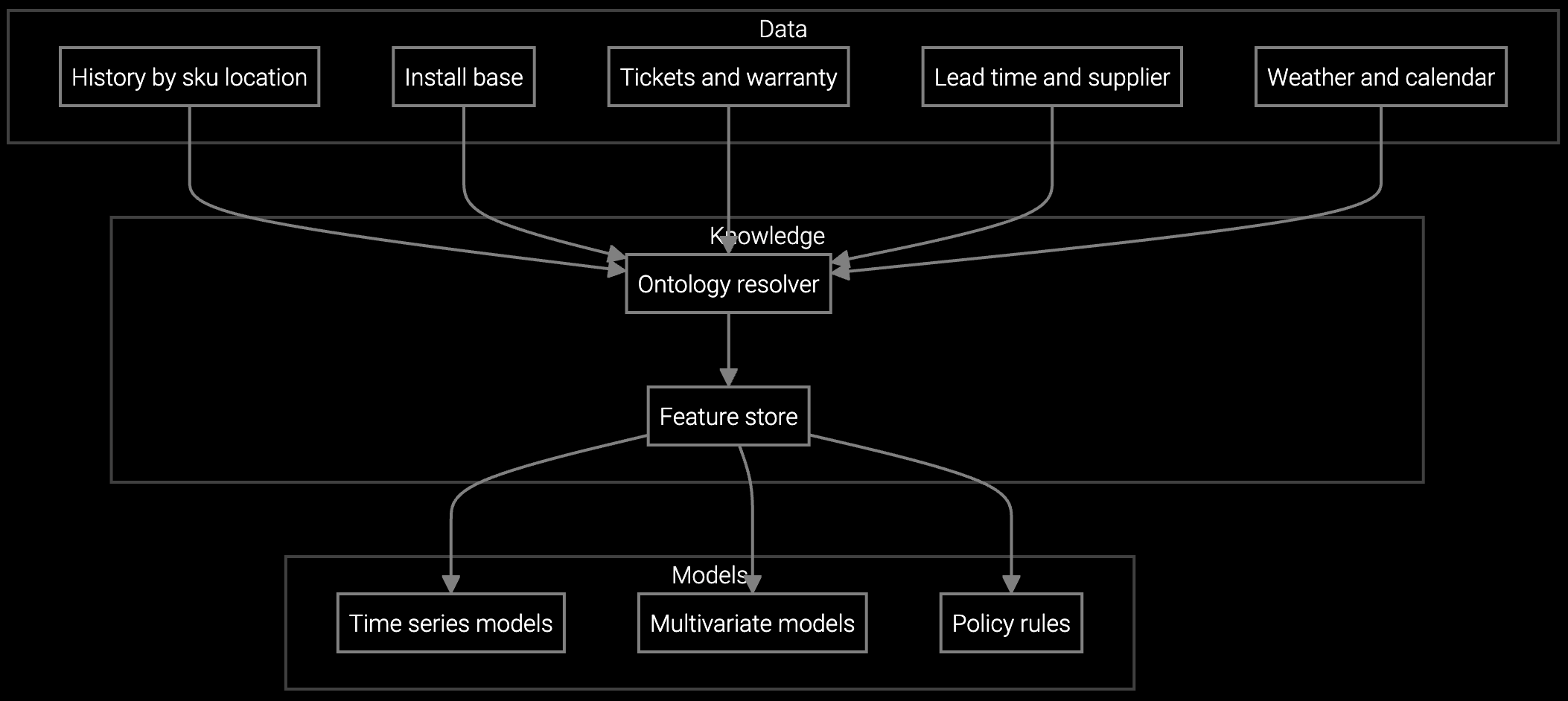

Forecast router and switch part demand by NOC location and firmware version, optimizing stock levels ahead of CVE patch cycles.
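One simple way to produce per-location, per-firmware stock targets is to group historical RMA counts by (NOC, firmware version) and size stock as mean weekly demand plus a safety buffer of z standard deviations. The sketch below is a baseline under stated assumptions, not the platform's forecasting method: the row layout is hypothetical, z = 1.65 corresponds to roughly 95% coverage only if weekly demand is approximately normal, and `cve_uplift` is an assumed planning input for firmware versions facing a patch window.

```python
from collections import defaultdict
from math import ceil
from statistics import mean, pstdev
from typing import Optional

# Hypothetical RMA history row: (noc, firmware_version, week_number, units_replaced)
RmaRow = tuple[str, str, int, int]


def stock_targets(rows: list[RmaRow], weeks: range, z: float = 1.65,
                  cve_uplift: Optional[dict[str, float]] = None) -> dict[tuple[str, str], int]:
    """Stock target per (NOC, firmware): ceil(mean weekly demand + z * std dev).

    `cve_uplift` maps a firmware version to a demand multiplier for an upcoming
    patch window (e.g. 1.5 for an assumed 50% uplift).
    """
    cve_uplift = cve_uplift or {}
    weekly: dict[tuple[str, str], dict[int, int]] = defaultdict(lambda: defaultdict(int))
    for noc, fw, week, units in rows:
        weekly[(noc, fw)][week] += units
    targets = {}
    for (noc, fw), by_week in weekly.items():
        # Weeks with no RMAs for this group count as zero demand, not missing data.
        series = [by_week.get(w, 0) for w in weeks]
        factor = cve_uplift.get(fw, 1.0)
        mu, sigma = mean(series) * factor, pstdev(series) * factor
        targets[(noc, fw)] = ceil(mu + z * sigma)
    return targets
```

Passing the week range explicitly keeps quiet weeks in the series, which matters for intermittent spare-parts demand.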

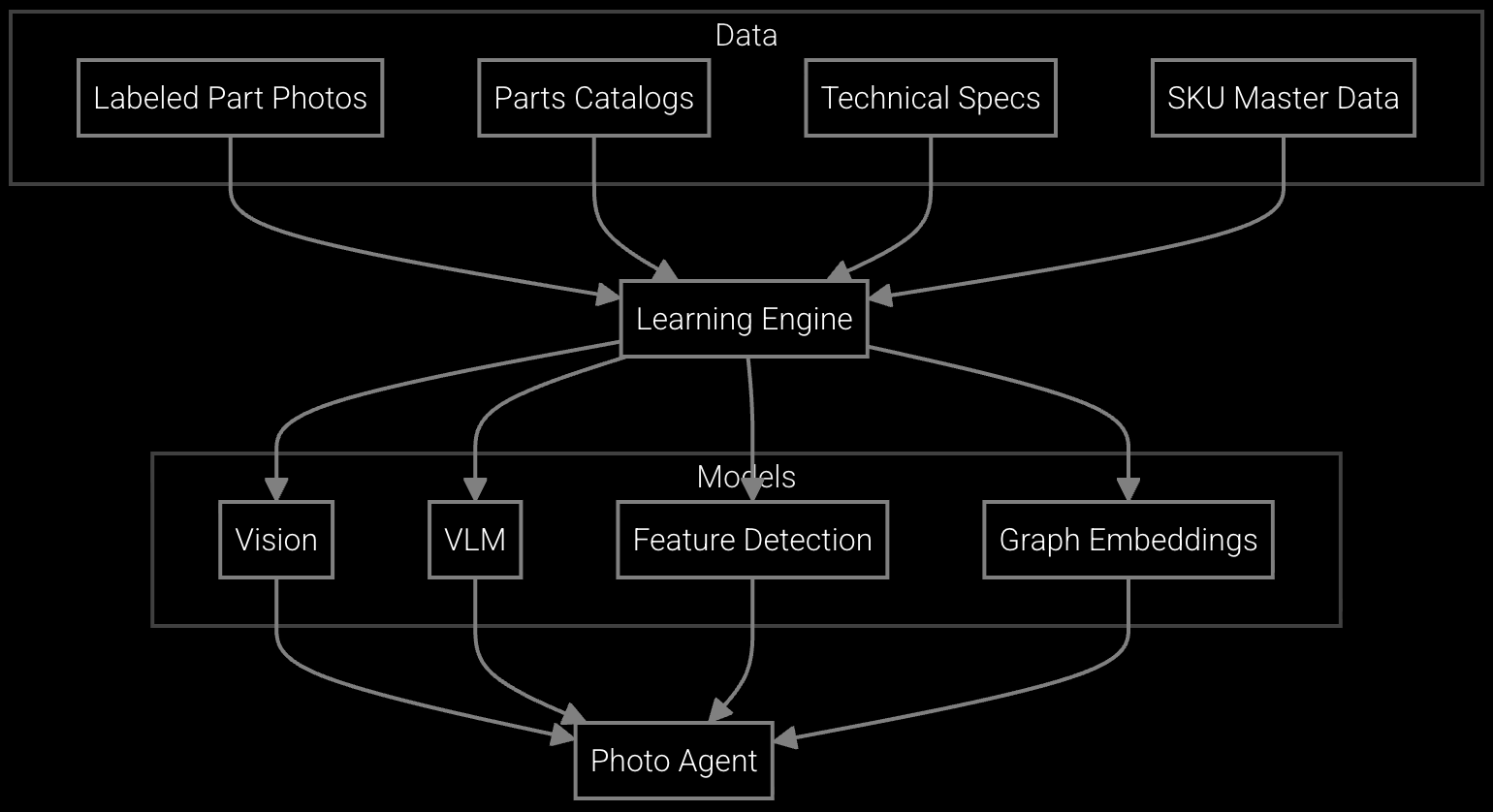

Field engineers snap a photo of a failed firewall module and instantly retrieve part number, EOL status, and substitute availability.

Project power supply consumption for carrier-grade switches based on installed base age, thermal telemetry, and seasonal traffic patterns.
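One common way to fold thermal telemetry into a consumption projection is the Arrhenius acceleration factor from reliability engineering, AF = exp((Ea / k) * (1/T_ref - 1/T_op)), with temperatures in kelvin and k the Boltzmann constant. The sketch below scales a baseline annualized failure rate for each age cohort by that factor and by a monthly seasonal multiplier. The 0.7 eV activation energy, 40 °C reference temperature, `PsuGroup` schema, and seasonal profile are placeholder assumptions; the platform may model these effects differently.

```python
import math
from dataclasses import dataclass

BOLTZMANN_EV = 8.617333262e-5  # Boltzmann constant in eV/K


def arrhenius_af(t_op_c: float, t_ref_c: float = 40.0, ea_ev: float = 0.7) -> float:
    """Acceleration factor of the operating temperature relative to the reference temperature."""
    t_op, t_ref = t_op_c + 273.15, t_ref_c + 273.15
    return math.exp((ea_ev / BOLTZMANN_EV) * (1 / t_ref - 1 / t_op))


@dataclass
class PsuGroup:
    """Hypothetical cohort of identical PSUs sharing a site and an age band."""
    units: int
    annual_failure_rate: float   # baseline AFR for this age band at t_ref (0.02 = 2%)
    avg_inlet_temp_c: float      # from thermal telemetry


# Assumed seasonal multipliers by month (1 = January), higher in summer.
SEASONAL = {1: 0.9, 2: 0.9, 3: 0.95, 4: 1.0, 5: 1.05, 6: 1.15,
            7: 1.2, 8: 1.2, 9: 1.05, 10: 1.0, 11: 0.95, 12: 0.9}


def projected_failures(groups: list[PsuGroup], month: int) -> float:
    """Expected PSU replacements in one month across all cohorts."""
    total = 0.0
    for g in groups:
        # AFR / 12 is a linear approximation that holds for small failure rates.
        monthly_rate = g.annual_failure_rate / 12
        total += g.units * monthly_rate * arrhenius_af(g.avg_inlet_temp_c) * SEASONAL[month]
    return total
```

Age enters through the per-cohort baseline AFR, so older cohorts can carry a higher rate than newer ones.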

Network equipment customers expect operational lifespans of 10-15 years, but component manufacturers discontinue chipsets and optics every 3-5 years. A carrier-grade router contains 200+ field-replaceable units sourced from a dozen suppliers, each with its own EOL cycle. Your customers commit to five-nines SLAs that require you to stock service parts for products you stopped manufacturing years ago.
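Because every field-replaceable unit (FRU) follows its own supplier's EOL schedule, a platform stays serviceable only until the earliest effective EOL among its FRUs, where a qualified substitute extends an FRU's effective EOL. The sketch below computes that bound for a small bill of materials; the `Fru` layout, the `eol_of` lookup, and the part numbers are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class Fru:
    part: str
    eol: Optional[date]                      # None if no EOL notice has been received
    substitutes: list[str] = field(default_factory=list)


def service_horizon(bom: list[Fru],
                    eol_of: dict[str, Optional[date]]) -> tuple[Optional[date], list[str]]:
    """Return the date after which some FRU can no longer be sourced, plus the limiting parts.

    An FRU's effective EOL is the latest EOL among itself and its known substitutes;
    if any of them has no EOL notice (None), the FRU is treated as still sourceable.
    Substitutes missing from `eol_of` are ignored, which is the conservative choice.
    """
    effective: dict[str, Optional[date]] = {}
    for fru in bom:
        candidates = [fru.eol] + [eol_of[s] for s in fru.substitutes if s in eol_of]
        effective[fru.part] = None if any(c is None for c in candidates) else max(candidates)
    dated = {p: d for p, d in effective.items() if d is not None}
    if not dated:
        return None, []
    horizon = min(dated.values())
    return horizon, sorted(p for p, d in dated.items() if d == horizon)
```

The limiting parts returned here are the natural candidates for a last-time buy or a substitute qualification effort.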

The platform ingests SNMP trap data, firmware version telemetry, and temperature sensor logs from your installed base to identify which devices are approaching failure-prone age thresholds. It correlates that risk profile with supplier EOL notices and historical RMA patterns to forecast which SKUs will spike in demand before your competitors buy out remaining inventory.
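If a part's failure behavior is summarized by a Weibull distribution with shape β and scale η (one common way to encode an MTBF curve), the probability that a unit of age a fails within the next h years, given that it has survived so far, is P = 1 - exp((a/η)^β - ((a+h)/η)^β). Summing P over the installed base gives expected replacements, and the Weibull hazard rate gives a natural "failure-prone age" test. The sketch assumes β and η have already been fitted per SKU from your RMA history; the values and the hazard threshold in the usage example are placeholders.

```python
import math


def conditional_failure_prob(age: float, horizon: float, beta: float, eta: float) -> float:
    """P(fail in (age, age + horizon] | survived to age) for a Weibull(beta, eta) lifetime."""
    return 1.0 - math.exp((age / eta) ** beta - ((age + horizon) / eta) ** beta)


def hazard_rate(age: float, beta: float, eta: float) -> float:
    """Instantaneous Weibull failure rate; rises with age when beta > 1 (wear-out).

    Requires age > 0 when beta < 1.
    """
    return (beta / eta) * (age / eta) ** (beta - 1)


def expected_demand(ages: list[float], horizon: float, beta: float, eta: float) -> float:
    """Expected replacements over `horizon` years, one age entry per deployed unit."""
    return sum(conditional_failure_prob(a, horizon, beta, eta) for a in ages)


def past_threshold(ages: dict[str, float], max_hazard: float, beta: float, eta: float) -> list[str]:
    """Device IDs whose current hazard rate exceeds an assumed alerting threshold."""
    return sorted(d for d, a in ages.items() if hazard_rate(a, beta, eta) > max_hazard)


if __name__ == "__main__":
    beta, eta = 2.5, 12.0  # placeholder fit (years); beta > 1 means wear-out
    print(expected_demand([2.0] * 500, 1.0, beta, eta))
    print(expected_demand([9.0] * 500, 1.0, beta, eta))
```

With β > 1, the same number of older units yields noticeably more expected failures than younger ones, which is the non-linear consumption a straight-line forecast misses.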

Use platforms that provide Python or TypeScript SDKs with Apache 2.0 licensing, expose REST APIs for all model operations, and let you deploy trained models in your own Docker containers. Verify that you retain ownership of training data and model weights, and that inference can run on your infrastructure without proprietary runtimes.

You can retrain the models on your own data. The platform provides retraining pipelines that ingest your historical RMA records, installed base telemetry, and supplier lifecycle feeds. You control the training schedule, feature selection, and validation metrics. Model weights are versioned in your environment, and you can roll back to previous versions if forecast accuracy degrades.
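Rollback on accuracy degradation can be as simple as keeping an ordered list of model versions with their validation error and reverting when the active version does noticeably worse on live data. The sketch below uses mean absolute percentage error (MAPE) and a 10% relative tolerance; the metric, the tolerance, and the `ModelRegistry` structure are assumptions, not the platform's retraining API.

```python
from dataclasses import dataclass, field


def mape(actual: list[float], forecast: list[float]) -> float:
    """Mean absolute percentage error in %, skipping periods with zero actual demand."""
    pairs = [(a, f) for a, f in zip(actual, forecast) if a != 0]
    if not pairs:
        raise ValueError("MAPE is undefined when every actual value is zero")
    return 100 * sum(abs(a - f) / abs(a) for a, f in pairs) / len(pairs)


@dataclass
class ModelRegistry:
    """Hypothetical in-house registry of (version tag, weights path, validation MAPE)."""
    versions: list[tuple[str, str, float]] = field(default_factory=list)
    active: int = -1  # index of the version currently serving forecasts

    def promote(self, tag: str, weights_path: str, val_mape: float) -> None:
        """Register a newly trained version and make it active."""
        self.versions.append((tag, weights_path, val_mape))
        self.active = len(self.versions) - 1

    def check_and_rollback(self, live_mape: float, tolerance: float = 0.10) -> str:
        """Revert to the previous version if live MAPE exceeds validation MAPE by > tolerance."""
        _, _, val_mape = self.versions[self.active]
        if live_mape > val_mape * (1 + tolerance) and self.active > 0:
            self.active -= 1
        return self.versions[self.active][0]
```

Skipping zero-demand periods keeps MAPE defined for intermittent spare-parts demand; a scale-free alternative such as MASE may suit very sparse SKUs better.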

The API supports webhook integration with supplier product lifecycle management systems and can parse EOL notices from email or PDF feeds. You configure the data sources, and the system extracts EOL dates, recommended substitutes, and last-time-buy windows. This data is correlated with your bill-of-materials to flag at-risk assemblies before stockouts occur.

The forecasting model monitors telemetry streams in near real-time and detects anomalies in error rate or temperature patterns that precede increased RMA volume. It automatically adjusts demand projections and flags inventory risk. You can configure alert thresholds and integrate with your existing incident management tools via webhooks or API calls.
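The detection method isn't specified here; a rolling z-score is one common baseline for spotting telemetry readings that depart from recent behavior. The sketch below flags a reading when it sits more than `threshold` standard deviations from the mean of the previous `window` readings. The window size, threshold, and `min_sigma` floor are stand-ins for whatever alert thresholds you configure, and forwarding hits to a webhook is left out.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Iterable, Iterator


def zscore_anomalies(readings: Iterable[float], window: int = 30, threshold: float = 3.0,
                     min_sigma: float = 1e-6) -> Iterator[tuple[int, float, float]]:
    """Yield (index, value, z) for readings far from the trailing-window baseline.

    Each reading is scored against the previous `window` readings only, so a spike
    cannot inflate its own baseline. `min_sigma` keeps a perfectly flat history
    from causing division by zero.
    """
    history: deque = deque(maxlen=window)
    for i, x in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = max(pstdev(history), min_sigma)
            z = (x - mu) / sigma
            if abs(z) > threshold:
                yield i, x, z
        history.append(x)
```

Because it is a generator, the same function works on a finite log replay or on an unbounded stream of temperature or error-rate samples.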

A pilot integration connecting the REST API to your ERP and a single product line's telemetry typically completes in 3-5 business days. Model training on historical RMA data takes 1-2 weeks depending on dataset size. Production deployment follows standard DevOps practices using Docker and Kubernetes, with no special runtime dependencies.

SPM systems optimize supply response but miss demand signals outside their inputs. An AI operating layer makes the full picture visible and actionable.

Get API documentation and sandbox access to test parts forecasting integration with your ERP.
