Hyperscale customers demand four-nines uptime while your technician expertise walks out the door.

Data center equipment OEMs face a choice: build custom field service AI using internal teams or buy turnkey solutions. The optimal approach combines API-first platforms with pre-trained models, enabling technical teams to customize without vendor lock-in while accelerating time-to-value.

Building field service AI from scratch means assembling datasets from IPMI logs, training foundation models, and integrating with field service management (FSM) systems. Meanwhile, data center OEMs are under pressure to reduce MTTR, yet internal teams spend months on infrastructure before delivering a single technician-facing tool.
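Dataset assembly is where much of that early infrastructure time goes. Below is a minimal sketch. It assumes System Event Log text in the pipe-delimited layout printed by `ipmitool sel list` (record ID, date, time, sensor, event, direction), with hexadecimal record IDs and MM/DD/YYYY dates, and normalizes each entry into a typed record. The `SelEvent` class and the function names are illustrative, and real SEL output varies by BMC vendor and ipmitool version.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass(frozen=True)
class SelEvent:
    """One System Event Log entry, normalized for a training dataset."""
    record_id: int
    timestamp: datetime
    sensor: str
    description: str
    asserted: bool


def parse_sel_line(line: str) -> Optional[SelEvent]:
    """Parse one pipe-delimited SEL line; return None if it doesn't match the layout."""
    fields = [f.strip() for f in line.split("|")]
    if len(fields) != 6:
        return None
    record_id, date_s, time_s, sensor, description, direction = fields
    try:
        # Assumes hex record IDs and MM/DD/YYYY HH:MM:SS timestamps.
        rid = int(record_id, 16)
        ts = datetime.strptime(f"{date_s} {time_s}", "%m/%d/%Y %H:%M:%S")
    except ValueError:
        return None
    return SelEvent(rid, ts, sensor, description, direction == "Asserted")


def parse_sel(text: str) -> List[SelEvent]:
    """Parse a whole SEL dump, skipping malformed lines instead of failing."""
    events = []
    for line in text.splitlines():
        event = parse_sel_line(line)
        if event is not None:
            events.append(event)
    return events


sample = """\
   1 | 04/05/2023 | 10:15:32 | Power Supply PS2 | Power Supply AC lost | Asserted
   2 | 04/05/2023 | 10:16:01 | Power Supply PS2 | Power Supply AC lost | Deasserted
SEL has no entries
"""
events = parse_sel(sample)
print(len(events), events[0].sensor, events[0].asserted)  # → 2 Power Supply PS2 True
```

Skipping malformed lines rather than raising keeps one odd BMC from stalling a fleet-wide export. The trade-off is that dropped lines should be counted somewhere so data gaps stay visible.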

Turnkey vendor solutions promise fast deployment but assume generic workflows. Data center technicians work across diverse hardware generations, BMC interfaces, and customer SLA requirements. Black-box systems cannot adapt to specialized diagnostics or proprietary telemetry formats without expensive custom development.

Proprietary platforms create long-term dependency on vendor roadmaps and pricing. Data center OEMs need flexibility to extend models with new server generations, integrate emerging telemetry sources like liquid cooling systems, and maintain control over competitive differentiation as service becomes a margin driver.

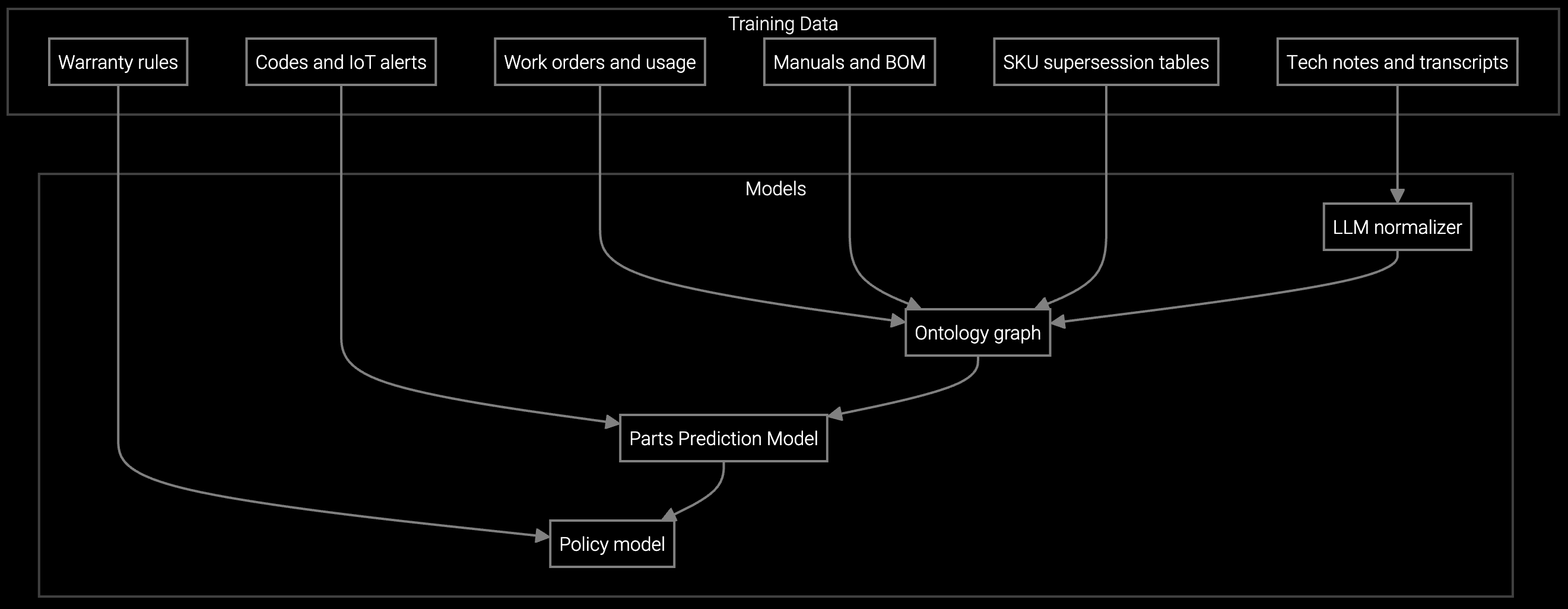

The strategic answer lies in API-first platforms that separate infrastructure from customization. Bruviti provides pre-trained models for common field service patterns (parts prediction, root cause analysis, diagnostic triage) and exposes Python and TypeScript SDKs plus REST APIs, so your engineering teams can extend, retrain, and integrate those models without touching the foundation layer.

This hybrid approach delivers immediate value through pre-built capabilities while preserving technical control. Your team writes TypeScript to connect BMC telemetry streams, extends the parts prediction model with proprietary failure data, and builds custom dashboards using standard frameworks. The platform handles the heavy lifting of model training, data pipelines, and scaling infrastructure while you maintain ownership of workflows, data sovereignty, and competitive differentiation.

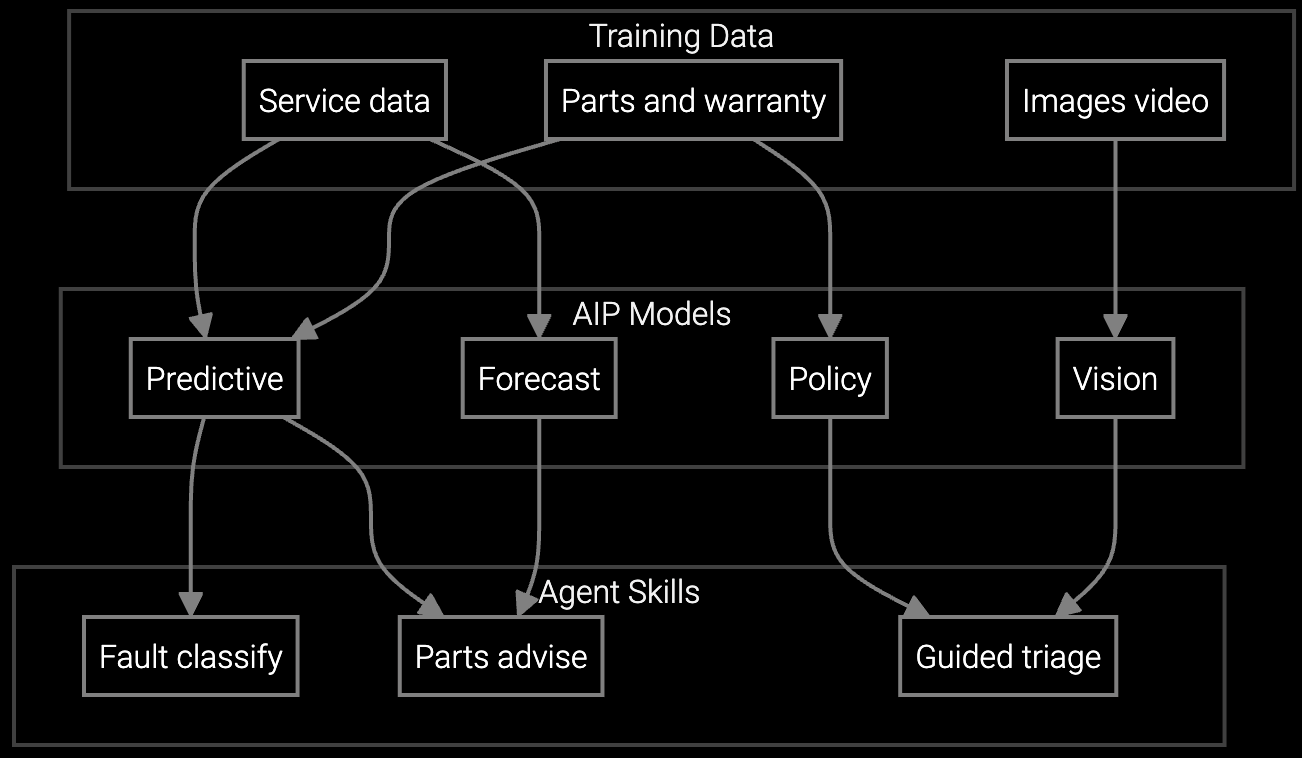

Analyze IPMI logs and BMC telemetry to predict which PSU, memory module, or cooling component will fail, staging parts before dispatch to hyperscale data centers.
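To make the idea concrete, the sketch below ranks part categories by recency-weighted counts of BMC warning events. It is a simple heuristic for illustration, not Bruviti's parts prediction model. The keyword table, the 24-hour half-life, and the function names are all assumptions.

```python
import math
from collections import defaultdict
from datetime import datetime, timedelta
from typing import Dict, Iterable, List, Optional, Tuple

# Hypothetical mapping from sensor-name keywords to stageable part categories.
PART_KEYWORDS: Dict[str, Tuple[str, ...]] = {
    "psu": ("power supply", "psu"),
    "memory": ("dimm", "memory", "ecc"),
    "cooling": ("fan", "cooling", "pump"),
}


def categorize(sensor: str) -> Optional[str]:
    """Map a BMC sensor name to a part category, or None if unrecognized."""
    name = sensor.lower()
    for part, keywords in PART_KEYWORDS.items():
        if any(k in name for k in keywords):
            return part
    return None


def rank_parts(
    events: Iterable[Tuple[datetime, str]],
    now: datetime,
    half_life_hours: float = 24.0,
) -> List[Tuple[str, float]]:
    """Score each part category by exponentially decayed event counts, highest first."""
    decay = math.log(2) / half_life_hours
    scores: Dict[str, float] = defaultdict(float)
    for ts, sensor in events:
        part = categorize(sensor)
        if part is None or ts > now:
            continue
        age_hours = (now - ts).total_seconds() / 3600
        scores[part] += math.exp(-decay * age_hours)  # one half-life old counts 0.5
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


now = datetime(2024, 1, 2)
ranked = rank_parts(
    [
        (now - timedelta(hours=1), "FAN3"),
        (now - timedelta(hours=2), "PSU1 Status"),
        (now - timedelta(hours=48), "DIMM_A1 ECC"),
        (now - timedelta(hours=48), "DIMM_A1 ECC"),
    ],
    now,
)
print([part for part, _ in ranked])  # → ['cooling', 'psu', 'memory']
```

Exponential decay lets recent warnings dominate without discarding history. A production model would learn these weights from labeled failures instead of fixing them by hand.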

Correlate thermal anomalies, firmware logs, and historical failure patterns to identify whether hot aisle configuration or PDU issues drive server shutdowns.
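One simple way to express that correlation is to attribute each shutdown to whichever signal appeared shortly before it. The sketch assumes that inlet temperature readings, PDU event timestamps, and shutdown timestamps have already been extracted. The 27 °C limit mirrors the upper bound of ASHRAE's recommended inlet range. The 15-minute lookback window and all names are illustrative assumptions, not tuned values.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Sequence, Tuple


def hot_inlet_events(
    readings: Sequence[Tuple[datetime, float]], limit_c: float = 27.0
) -> List[datetime]:
    """Timestamps where inlet temperature exceeded the limit (a crude thermal-anomaly signal)."""
    return [ts for ts, celsius in readings if celsius > limit_c]


def attribute_shutdowns(
    shutdowns: Sequence[datetime],
    thermal_events: Sequence[datetime],
    pdu_events: Sequence[datetime],
    window: timedelta = timedelta(minutes=15),
) -> Dict[datetime, str]:
    """Label each shutdown by which signal(s) occurred in the preceding window."""
    labels = {}
    for shutdown in shutdowns:
        hot = any(shutdown - window <= t <= shutdown for t in thermal_events)
        power = any(shutdown - window <= t <= shutdown for t in pdu_events)
        if hot and power:
            labels[shutdown] = "thermal+power"
        elif hot:
            labels[shutdown] = "thermal"
        elif power:
            labels[shutdown] = "power"
        else:
            labels[shutdown] = "unexplained"
    return labels


t0 = datetime(2024, 5, 1, 12, 0)
thermal = hot_inlet_events([(t0, 24.0), (t0 + timedelta(minutes=5), 31.0)])
labels = attribute_shutdowns(
    shutdowns=[t0 + timedelta(minutes=10), t0 + timedelta(hours=2, minutes=5), t0 + timedelta(hours=5)],
    thermal_events=thermal,
    pdu_events=[t0 + timedelta(hours=2)],
)
print(list(labels.values()))  # → ['thermal', 'power', 'unexplained']
```

Aggregated across a site, these labels point in different directions. A cluster of "thermal" labels points toward hot aisle configuration, while a cluster of "power" labels points toward the PDU.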

Mobile copilot surfaces RAID rebuild procedures, BMC reset sequences, and SLA context on-site, reducing dependency on senior technicians for complex server configurations.

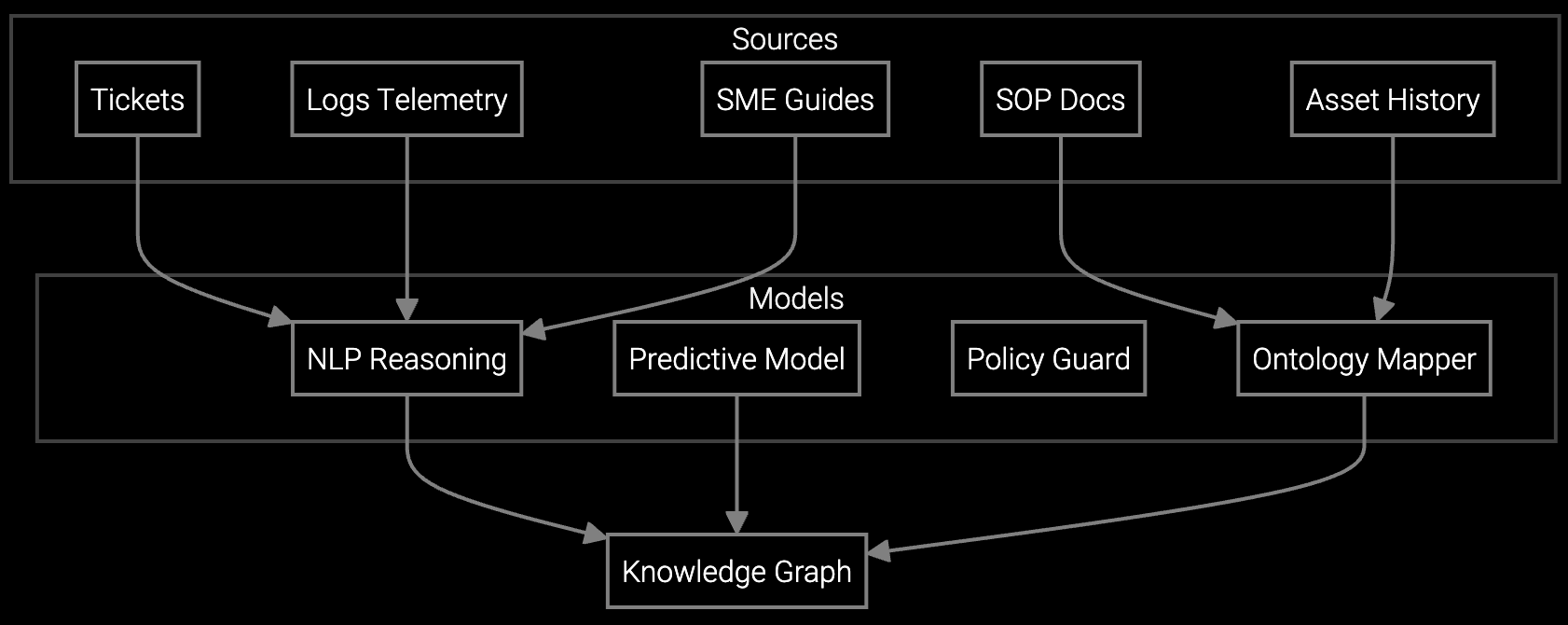

Data center OEMs serve hyperscale customers with custom SLAs, proprietary BMC implementations, and hardware configurations that evolve every 12-18 months. Generic field service platforms assume standardized equipment and workflows, forcing awkward workarounds for liquid cooling diagnostics, multi-vendor RAID analysis, or customer-specific telemetry formats.

Your competitive advantage comes from faster MTTR through better diagnostics and parts staging accuracy. An API-first platform lets your team build on proven foundation models while extending them with proprietary thermal analysis algorithms, custom BMC parsers, and integration with your existing FSM, ERP, and warranty systems. You maintain control over the intellectual property that differentiates your service offering while avoiding the 18-month build timeline for infrastructure.
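Custom BMC parsers are one of the extension points named above. The minimal sketch below assumes a registry keyed by format name. One parser reads the `Temperatures` array of a DMTF Redfish `Thermal` resource (`Name`, `ReadingCelsius`). A second handles a hypothetical proprietary `sensor=value unit` text format, such as a liquid cooling controller might emit. The registry, the `acme-kv` format, and all function names are illustrative.

```python
import json
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Reading:
    """A normalized sensor reading, independent of the source format."""
    sensor: str
    value: float
    unit: str


Parser = Callable[[str], List[Reading]]
PARSERS: Dict[str, Parser] = {}


def register(fmt: str) -> Callable[[Parser], Parser]:
    """Decorator that adds a parser to the registry under a format name."""
    def decorator(fn: Parser) -> Parser:
        PARSERS[fmt] = fn
        return fn
    return decorator


@register("redfish-thermal")
def parse_redfish_thermal(payload: str) -> List[Reading]:
    """Read the Temperatures array of a Redfish Thermal resource, skipping null readings."""
    doc = json.loads(payload)
    return [
        Reading(t["Name"], float(t["ReadingCelsius"]), "C")
        for t in doc.get("Temperatures", [])
        if t.get("ReadingCelsius") is not None
    ]


@register("acme-kv")  # hypothetical proprietary format: one "sensor=value unit" per line
def parse_acme_kv(payload: str) -> List[Reading]:
    readings = []
    for line in payload.splitlines():
        if "=" not in line:
            continue  # skip headers and blank lines
        sensor, rest = line.split("=", 1)
        value, unit = rest.split(maxsplit=1)
        readings.append(Reading(sensor.strip(), float(value), unit.strip()))
    return readings


def parse(fmt: str, payload: str) -> List[Reading]:
    """Dispatch to the registered parser, failing loudly for unknown formats."""
    parser = PARSERS.get(fmt)
    if parser is None:
        raise ValueError(f"no parser registered for format {fmt!r}")
    return parser(payload)


print(parse("acme-kv", "coolant_supply=18.5 C\nflow_rate=12.0 lpm"))
```

Because every parser emits the same `Reading` shape, downstream models never need to know which BMC or cooling controller produced the data. Adding a new hardware generation becomes a matter of registering one more function.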

Bruviti provides Python and TypeScript SDKs for model extension, data pipeline integration, and custom workflow development. REST APIs support any language stack. All interfaces use open standards, allowing your team to work with familiar tools like FastAPI, Node.js, or custom microservices architectures without learning proprietary frameworks.

The platform architecture separates your custom logic from infrastructure. Your extensions—custom models, integration code, business rules—run as standard Python or TypeScript modules that interact via documented APIs. You retain full ownership of training data, model weights, and application code. If you choose to migrate, your custom components port to any environment that supports Python and standard ML frameworks.
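To make the portability point concrete, here is a minimal sketch of an extension written as plain Python against an interface defined with `typing.Protocol`. The `PartsPredictor` contract, the `CoolantLoopRule` business rule, and `run_extension` are hypothetical stand-ins, not Bruviti's documented API. The delta-T rule is illustrative, not a validated failure signal. The point is that the extension imports no platform code, so it runs unchanged in any Python environment.

```python
from typing import Dict, Mapping, Protocol, runtime_checkable


@runtime_checkable
class PartsPredictor(Protocol):
    """Hypothetical contract: map feature values to per-part failure risk in [0, 1]."""

    def predict(self, features: Mapping[str, float]) -> Mapping[str, float]:
        ...


class CoolantLoopRule:
    """Illustrative custom rule: pump risk rises with coolant delta-T (return minus supply)."""

    def __init__(self, max_delta_t: float = 10.0) -> None:
        self.max_delta_t = max_delta_t

    def predict(self, features: Mapping[str, float]) -> Mapping[str, float]:
        delta_t = features.get("coolant_return_c", 0.0) - features.get("coolant_supply_c", 0.0)
        risk = min(1.0, max(0.0, delta_t / self.max_delta_t))  # clamp to [0, 1]
        return {"pump": risk}


def run_extension(ext: object, features: Mapping[str, float]) -> Dict[str, float]:
    """Host-side call; isinstance() only checks that a predict method exists."""
    if not isinstance(ext, PartsPredictor):
        raise TypeError(f"{type(ext).__name__} does not implement predict()")
    return dict(ext.predict(features))


print(run_extension(CoolantLoopRule(), {"coolant_supply_c": 18.0, "coolant_return_c": 23.0}))
# → {'pump': 0.5}
```

Moving the rule to another environment means re-implementing only the host side (`run_extension` here). The extension itself stays as it is.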

Your team has full control over model extension and fine-tuning. The platform provides foundation models pre-trained on general field service patterns. Your team can extend these with proprietary telemetry data, retrain them for specific equipment generations, and adjust prediction thresholds to match your SLA requirements. Version control and A/B testing capabilities let you validate model changes before production deployment.
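Adjusting a threshold to an SLA can be framed as a concrete question: what is the highest score cutoff that still catches a target share of real failures on held-out data? A/B validation, in turn, needs a stable way to route assets to a candidate model. The sketch below shows both under stated assumptions. The per-tier recall targets are invented for illustration, and `threshold_for_recall` and `ab_bucket` are hypothetical helpers, not platform functions.

```python
import hashlib
import math
from typing import Sequence, Tuple

# Hypothetical recall targets: stricter SLAs tolerate fewer missed failures.
SLA_RECALL_TARGETS = {"platinum": 0.95, "standard": 0.75}


def threshold_for_recall(scored: Sequence[Tuple[float, bool]], target_recall: float) -> float:
    """Highest threshold t such that flagging score >= t catches >= target_recall of failures."""
    if not 0.0 < target_recall <= 1.0:
        raise ValueError("target_recall must be in (0, 1]")
    failure_scores = sorted((score for score, failed in scored if failed), reverse=True)
    if not failure_scores:
        raise ValueError("validation data contains no failures")
    # round() guards against float noise such as 0.7 * 10 == 7.000000000000001
    needed = math.ceil(round(target_recall * len(failure_scores), 9))
    return failure_scores[needed - 1]


def ab_bucket(asset_id: str, candidate_share: float = 0.1) -> str:
    """Deterministically route an asset to the candidate or production model."""
    digest = hashlib.sha256(asset_id.encode("utf-8")).digest()
    fraction = int.from_bytes(digest[:6], "big") / 2**48  # uniform in [0, 1), exact in a float
    return "candidate" if fraction < candidate_share else "production"


validation = [(0.9, True), (0.8, True), (0.7, False), (0.4, True), (0.2, True), (0.1, False)]
for tier, recall in SLA_RECALL_TARGETS.items():
    print(tier, threshold_for_recall(validation, recall))
```

Hashing the asset ID, rather than sampling per request, keeps each server on one model for the whole test. That way, before-and-after comparisons are not mixed across model versions.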

Pure build approaches deliver first production value after 18-24 months. Turnkey vendors promise 3-6 month deployments but often require another 3-6 months of custom integration work. API-first platforms with pre-trained models enable pilot deployment in 4-6 weeks for standard use cases like parts prediction, with customization layers added incrementally as your team validates value and identifies extension opportunities.

Event-driven architectures using message queues work well for real-time BMC telemetry streams. REST APIs handle FSM integration for work order updates and technician context. The platform provides connectors for common protocols like IPMI, Redfish, and SNMP, plus SDK support for building custom parsers for proprietary telemetry formats. Your team controls data flow, transformation logic, and storage locations throughout the pipeline.
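The event-driven pattern can be illustrated with the standard library alone. In this sketch, `queue.Queue` stands in for a real message broker, and a worker thread consumes telemetry events, keeping only inlet temperature readings above a limit. A `None` sentinel shuts the worker down cleanly. The event shape, the sensor name `inlet_temp_c`, and the 27 °C limit are assumptions. In production, the consumer would publish alerts onward, for example to the FSM integration, rather than collect them in a list.

```python
import queue
import threading
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class TelemetryEvent:
    host: str
    sensor: str
    value: float


def consume(q: queue.Queue, alerts: List[TelemetryEvent], limit_c: float) -> None:
    """Worker loop: filter events until the None sentinel arrives."""
    while True:
        event = q.get()
        try:
            if event is None:  # sentinel: the producer is done
                return
            if event.sensor == "inlet_temp_c" and event.value > limit_c:
                alerts.append(event)
        finally:
            q.task_done()


def run_pipeline(events: Iterable[TelemetryEvent], limit_c: float = 27.0) -> List[TelemetryEvent]:
    """Publish events to a bounded queue and return the alerts the consumer raised."""
    q: queue.Queue = queue.Queue(maxsize=1000)  # bounded, so a slow consumer applies backpressure
    alerts: List[TelemetryEvent] = []
    worker = threading.Thread(target=consume, args=(q, alerts, limit_c), daemon=True)
    worker.start()
    for event in events:
        q.put(event)  # blocks while the queue is full
    q.put(None)
    worker.join()
    return alerts


alerts = run_pipeline([
    TelemetryEvent("rack12-u07", "inlet_temp_c", 25.0),
    TelemetryEvent("rack12-u08", "inlet_temp_c", 30.5),
    TelemetryEvent("rack12-u08", "fan_rpm", 9000.0),
])
print(alerts)
```

The bounded queue is the key design choice. When a consumer falls behind, producers block instead of exhausting memory, which mirrors how broker-backed pipelines absorb telemetry bursts.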

Talk to our technical team about API access, SDK capabilities, and pilot deployment timelines.